Why Kubernetes is Great for Running AI/MLOps Workloads

Kubernetes is an open-source platform that can automate container operations by eliminating most of the manual steps needed to deploy and scale containerized applications. It provides the required flexibility, portability and scalability to build, train, test and deploy machine learning (ML) models efficiently.

In this article, we’ll delve deep into why Kubernetes is considered the platform of choice for running AI/ML workloads.

Why Kubernetes for AI/ML Workloads?

Kubernetes orchestrates containers across a cluster of machines, so the same platform that runs web services can also deploy, manage and scale AI and MLOps workloads while absorbing much of their operational complexity. In recent years it has become the de facto standard for container orchestration because it deploys and manages large-scale enterprise applications efficiently.

Scalability and Flexibility

Kubernetes scales AI workloads horizontally by spreading them across multiple nodes. Because the same APIs work on premises and with different cloud providers, it also fits hybrid and multi-cloud environments, which makes compute-hungry AI models easier to manage.

Kubernetes lets you scale ML workloads up or down as demand changes. ML pipelines can therefore take on large-scale processing and training without starving other workloads in the cluster. It is also well suited to batch processing: by running tasks in parallel, it can shorten AI model training.
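Scaling up or down as demand changes can be automated with the Horizontal Pod Autoscaler (HPA), which uses a simple proportional rule: desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below is a simplified Python model of that rule; it assumes a single metric such as average CPU utilization and ignores stabilization windows, pod readiness and missing metrics, which the real controller handles. The function name and the min/max defaults are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified model of the HPA scaling rule (illustrative, single metric)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to how far the metric is from its target.
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured minimum and maximum replica counts.
    return max(min_replicas, min(max_replicas, proposed))


# 4 inference pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# The same pods at 20% CPU: scale in.
print(desired_replicas(4, 20, 60))  # 2
# 63% is within 10% of the target, so nothing changes.
print(desired_replicas(4, 63, 60))  # 4
```

The clamp matters for ML serving: a traffic spike can never push the deployment past `max_replicas`, which keeps expensive accelerator nodes under control.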

Resource Management

AI and ML workloads consume a lot of resources, so managing resources carefully is critical in any Kubernetes environment that runs them. Kubernetes lets each workload declare the CPU, GPU and memory it needs, and the scheduler places pods on nodes that can satisfy those requests, which keeps utilization high. Using resources efficiently reduces costs and improves workload performance. Because allocation is dynamic, AI models get the resources they need while cost and performance stay balanced.
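Resource needs are declared per container as requests and limits. The Python sketch below builds a Pod manifest as a plain dictionary; it assumes an NVIDIA GPU device plugin is installed on the cluster (which exposes the `nvidia.com/gpu` resource), and the pod name and image are hypothetical placeholders. Dumped as JSON, the result is a manifest that `kubectl apply -f` accepts.

```python
import json


def training_pod(name: str, image: str, cpu: str, memory: str, gpus: int) -> dict:
    """Build a Pod manifest (as a dict) that declares CPU, memory and GPU needs."""
    resources = {
        # The scheduler uses requests to pick a node with enough free capacity.
        "requests": {"cpu": cpu, "memory": memory},
        # Limits cap what the container may consume at runtime.
        "limits": {"cpu": cpu, "memory": memory},
    }
    if gpus > 0:
        # GPUs are extended resources exposed by a device plugin (assumed: NVIDIA).
        # They are set in limits; if requests are also given, they must match.
        resources["limits"]["nvidia.com/gpu"] = gpus
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{"name": "trainer", "image": image, "resources": resources}],
        },
    }


# Hypothetical training pod: 4 CPUs, 16 GiB of memory and one GPU.
pod = training_pod("resnet-train", "registry.example.com/ml/trainer:latest", "4", "16Gi", 1)
print(json.dumps(pod, indent=2))
```

Setting CPU and memory requests equal to their limits gives the pod the Guaranteed QoS class, which makes it less likely to be evicted under node pressure; lowering the requests trades that predictability for tighter packing of nodes.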

Containers

Containers package an application together with all of its dependencies, so you can build and deploy applications that behave the same way on any machine with a compatible container runtime, from a developer laptop to a production cluster. Containers also help separate responsibilities between development and operations teams, which improves throughput and productivity.

Developers can concentrate on building applications, while operations teams focus on infrastructure requirements and on allocating and deallocating resources efficiently. As a result, adding new code or modules to an application over its lifecycle becomes simpler and more predictable.

Portability and Fault Tolerance

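Fault tolerance, the ability of a system to keep working or recover when components fail, is one of the most important capabilities an enterprise application should have today. Kubernetes has built-in self-healing: it can restart crashed containers, replace failed pods and reschedule workloads away from unhealthy nodes, so ML pipelines keep running through hardware or software failures. Portability follows from the same design: because workloads are packaged as containers and described in declarative manifests, they can move between on-premises and cloud clusters with few changes.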
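Self-healing rests on a reconcile loop: a controller repeatedly compares the desired state (for example, three replicas) with the actual state and acts on the difference. The following is a deliberately toy Python model of that idea, not the real ReplicaSet controller; the class name, pod naming scheme and the "Failed" marker are illustrative assumptions.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyReplicaController:
    """Toy model of a ReplicaSet-style reconcile loop (not the real controller)."""

    desired: int
    pods: dict = field(default_factory=dict)  # pod name -> "Running" or "Failed"
    _ids: count = field(default_factory=count, repr=False)

    def reconcile(self) -> list:
        """Drive the actual pod set toward the desired count; return new pod names."""
        # Forget pods that have failed, e.g. because their node went down.
        for name in [n for n, phase in self.pods.items() if phase == "Failed"]:
            del self.pods[name]
        created = []
        # Create replacements until the desired count is met again.
        while len(self.pods) < self.desired:
            name = f"trainer-{next(self._ids)}"
            self.pods[name] = "Running"
            created.append(name)
        # Remove surplus pods if the desired count was lowered.
        while len(self.pods) > self.desired:
            self.pods.popitem()
        return created


ctl = ToyReplicaController(desired=3)
print(ctl.reconcile())            # ['trainer-0', 'trainer-1', 'trainer-2']
ctl.pods["trainer-1"] = "Failed"  # simulate a container or node failure
print(ctl.reconcile())            # ['trainer-3']
print(sorted(ctl.pods))           # ['trainer-0', 'trainer-2', 'trainer-3']
```

Because the loop only looks at the current state, it does not matter why a pod disappeared; the same logic handles crashes, node loss and manual deletion.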

Security

Network policies, multi-tenancy, Secrets management and role-based access control (RBAC) help keep data and models secure at every stage of the pipeline. They also support emerging approaches such as federated learning, in which AI models are trained on data that stays distributed across different locations, an approach often used to meet privacy requirements.
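RBAC policies are ordinary Kubernetes objects. As a sketch of least privilege, the Python function below builds a Role that can read exactly one Secret and a RoleBinding that grants that Role to a model-serving service account. The namespace, service account and Secret names are hypothetical.

```python
import json


def read_secret_rbac(namespace: str, service_account: str, secret_name: str) -> list:
    """Build a Role and RoleBinding that allow reading a single Secret."""
    role_name = f"read-{secret_name}"
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where Secrets live
                "resources": ["secrets"],
                "resourceNames": [secret_name],  # restrict access to this one Secret
                "verbs": ["get"],
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": role_name, "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role_name,
        },
    }
    return [role, binding]


objects = read_secret_rbac("ml-serving", "model-server", "model-registry-credentials")
for obj in objects:
    print(json.dumps(obj, indent=2))
```

A Role is namespaced, so this grant cannot leak into other teams' namespaces; a ClusterRole would be needed for cluster-wide access.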

Data Management

AI and ML workloads usually depend on data that is large, distributed and needs flexible access. Kubernetes abstracts the underlying complexity of data storage through PersistentVolumes, PersistentVolumeClaims, StorageClasses and built-in support for cloud, network and local storage solutions.
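From a workload's point of view, storage is requested through a PersistentVolumeClaim that names a StorageClass. The sketch below builds such a claim for a shared training dataset, plus the pod-spec pieces that mount it. The StorageClass name `shared-nfs`, the claim name and the mount path are assumptions, and `ReadWriteMany` only works if the storage behind the StorageClass supports it.

```python
import json


def dataset_claim(name: str, size: str, storage_class: str, shared: bool) -> dict:
    """Build a PersistentVolumeClaim manifest for a training dataset."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteMany lets pods on several nodes mount the volume at once,
            # but only if the storage backend supports it.
            "accessModes": ["ReadWriteMany" if shared else "ReadWriteOnce"],
            # The StorageClass selects the provisioner (cloud disk, NFS, and so on).
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_claim(claim_name: str, mount_path: str) -> dict:
    """Fragments that mount the claim: 'volumes' goes in the pod spec,
    'volumeMounts' goes in the container spec."""
    return {
        "volumes": [{"name": "dataset", "persistentVolumeClaim": {"claimName": claim_name}}],
        "volumeMounts": [{"name": "dataset", "mountPath": mount_path, "readOnly": True}],
    }


claim = dataset_claim("imagenet-train", "500Gi", "shared-nfs", shared=True)
print(json.dumps(claim, indent=2))
print(json.dumps(mount_claim("imagenet-train", "/data"), indent=2))
```

The training code only sees a directory at `/data`; swapping NFS for a cloud file store means changing the StorageClass, not the model code.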

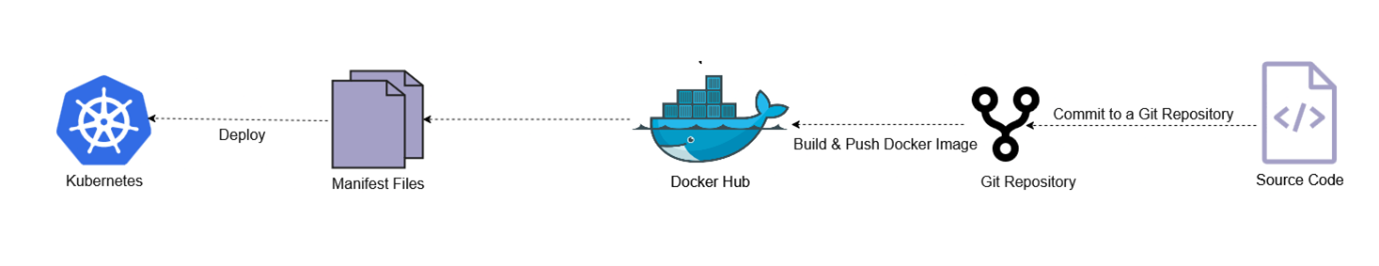

Figure 1: AI/ML workloads running in a Kubernetes environment

Tools Required for Running AI/ML Workloads on Kubernetes

Kubernetes has no built-in support for ML concepts such as experiments, training runs or model versions, so you need additional tools to manage ML workflows on it. The following are the key tools that integrate with Kubernetes to handle ML tasks in a cluster.

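- MLflow: experiment tracking, model packaging and a model registry

- TensorFlow: an ML framework whose distributed training jobs can run on Kubernetes, for example through Kubeflow's training operator

- Kubeflow: an ML toolkit for Kubernetes that includes Kubeflow Pipelines, notebooks and training operators

- KubeRay: an operator for deploying and managing Ray clusters on Kubernetes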

Examples of ML Workflows in Kubernetes

The following are a few examples of running AI/ML workloads in a Kubernetes environment.

- Scaling workloads at runtime based on demand

- Deploying models to the production environment while enabling rollbacks and updates

- Optimizing ML model performance

- Implementing complete CI/CD workflows for ML using Kubeflow Pipelines

- Training deep learning models using PyTorch, TensorFlow, etc.

- Processing vast datasets offline

- Distributing model training tasks among multiple pods simultaneously
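Distributing work among pods can be done with an Indexed Job, where Kubernetes gives each pod a stable index in the `JOB_COMPLETION_INDEX` environment variable. The sketch below builds such a Job and shows how a worker might pick its share of the input files; the job name, image and file layout are hypothetical. This pattern suits embarrassingly parallel work such as per-shard preprocessing or independent training runs; synchronous distributed training, where workers exchange gradients, is usually handled by dedicated operators such as Kubeflow's PyTorchJob.

```python
import os


def sharded_job(name: str, image: str, workers: int) -> dict:
    """Build an Indexed Job manifest that runs one pod per data shard."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completions": workers,  # one successful pod per shard
            "parallelism": workers,  # run all shards at the same time
            "completionMode": "Indexed",  # each pod gets an index 0..workers-1
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": "worker", "image": image}],
                }
            },
        },
    }


def my_shard(files: list, workers: int) -> list:
    """Inside a worker pod: select this pod's slice of the input files."""
    # Kubernetes sets JOB_COMPLETION_INDEX in every pod of an Indexed Job.
    index = int(os.environ.get("JOB_COMPLETION_INDEX", "0"))
    return files[index::workers]


job = sharded_job("preprocess-shards", "registry.example.com/ml/worker:latest", 3)

# Simulate the environment of the pod with index 1 in a 3-worker Job.
os.environ["JOB_COMPLETION_INDEX"] = "1"
files = [f"part-{i}.parquet" for i in range(6)]
print(my_shard(files, workers=3))  # ['part-1.parquet', 'part-4.parquet']
```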

The Challenges

Kubernetes adds a layer of complexity that can be hard to manage, particularly in AI contexts where workloads must be orchestrated carefully, and several challenges must be addressed when working with it. The first is a steep learning curve: teams need to understand Kubernetes architecture components and how applications are deployed in a Kubernetes environment.

In addition, managing a Kubernetes environment efficiently requires sound knowledge of containerization, orchestration and cloud-native architecture. Cluster management involves continuous monitoring, upgrading and scaling, tasks that can consume significant resources and often require a dedicated operations team. Finally, ensuring high availability and effectively managing GPU resources, security and networking are critical within a Kubernetes cluster.

Conclusion

Kubernetes has become the platform of choice, and arguably the de facto platform, for deploying AI and MLOps workloads, offering flexibility, scalability, reliability and cost efficiency. It abstracts infrastructure complexity, unlocks hardware acceleration such as GPUs and makes it easier to bring DevOps and MLOps best practices into ML.

Whether you’re building a recommendation engine, deploying real-time computer vision at the edge or managing a global fleet of generative AI services, Kubernetes can help you accelerate innovation, manage complexity and scale with confidence.

If you want to empower your ML and data science practices, future-proof your AI strategy and maximize your infrastructure investment, now is the time to start running your AI and MLOps workloads on Kubernetes.