Kaloom Allies with Red Hat to Create Virtual Networks on the Edge

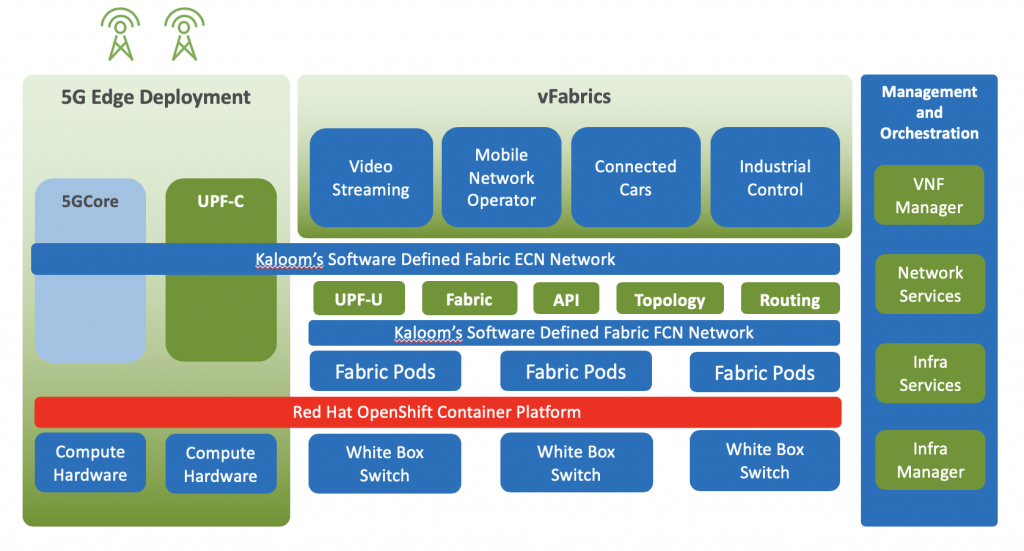

Kaloom announced today it has partnered with Red Hat to make it easier to deliver network services to edge computing applications built using the Red Hat OpenShift Platform.

Kaloom CTO Suresh Krishnan says most of the applications built for the edge will be based on microservices running on a distribution of Kubernetes in the form of Red Hat OpenShift. Each of those microservices will now be able to access the Cloud Edge Fabric from Kaloom, a set of network services delivered by a virtual switch that runs natively on a Kubernetes platform, he says.

Unveiled during the Red Hat Summit online conference, the alliance provides a framework for running microservices-based applications capable of accessing both 4G and 5G wireless networking services at the edge, adds Krishnan.

The Kaloom Cloud Edge Fabric is based on the Kaloom Software Defined Fabric (SDF), which was developed using the P4 language to create a programmable data plane in networking environments based on Kubernetes. SDF is compatible with multiple software-defined networking (SDN) controllers found in OpenStack environments or in any instance of the open source OpenDaylight software. Kaloom has also developed a container network interface (CNI) plugin called Kactus. Kaloom SDF enables zero-touch provisioning of virtual networks. Additional compute and storage resources can be dynamically assigned or removed via the associated vFabric to create an elastic pool of network resources.

As more bandwidth becomes available at the edge, telecommunications carriers and enterprise IT organizations alike are racing to make more compute power available for applications that need to process data in near real time. Applications that run advanced analytics as close as possible to the point where data is created cannot tolerate the latency that would be incurred if those analytics workloads were accessed in the cloud over a wide area network (WAN). The need to run those applications locally is driving demand for Kubernetes platforms that can run them efficiently on edge computing platforms, where compute and storage resources are finite. In the not-too-distant future, there may be even more application workloads deployed at the edge than there are in the cloud.

Of course, edge computing applications will also need to be integrated with application workloads running in the cloud, which is why Red Hat is making a case for hybrid cloud computing environments based on instances of OpenShift running at the edge, in local data centers and in the public cloud.

Eventually, the rise of hybrid cloud computing should drive a convergence of network operations (NetOps) and DevOps as network overlays and underlays become more programmable. The debate will be over how much control developers need. Network bandwidth is a shared resource, so increased demand for it from one application is likely to adversely impact other applications. Most NetOps teams will prefer to maintain control of network bandwidth, at least until enough of it is available in some far-off 5G future that bandwidth allocation is no longer the issue it is today.