VMware Launches App Connectivity Platform Based on Istio

VMware today launched VMware Modern Apps Connectivity, a suite that combines its Tanzu Service Mesh and VMware NSX Advanced Load Balancer (ALB) software into a single offering. Tanzu Service Mesh is based on a curated open source instance of the Istio service mesh that runs on Kubernetes. VMware NSX Advanced Load Balancer (ALB) is based on networking software VMware gained with its acquisition of Avi Networks in 2019.

Chandra Sekar, senior director for product management at VMware, says the goal is to make it simpler for IT organizations to acquire and set up the infrastructure required to provide secure connectivity at a time when the number of microservices exposing application programming interfaces (APIs) in a production environment continues to steadily increase.

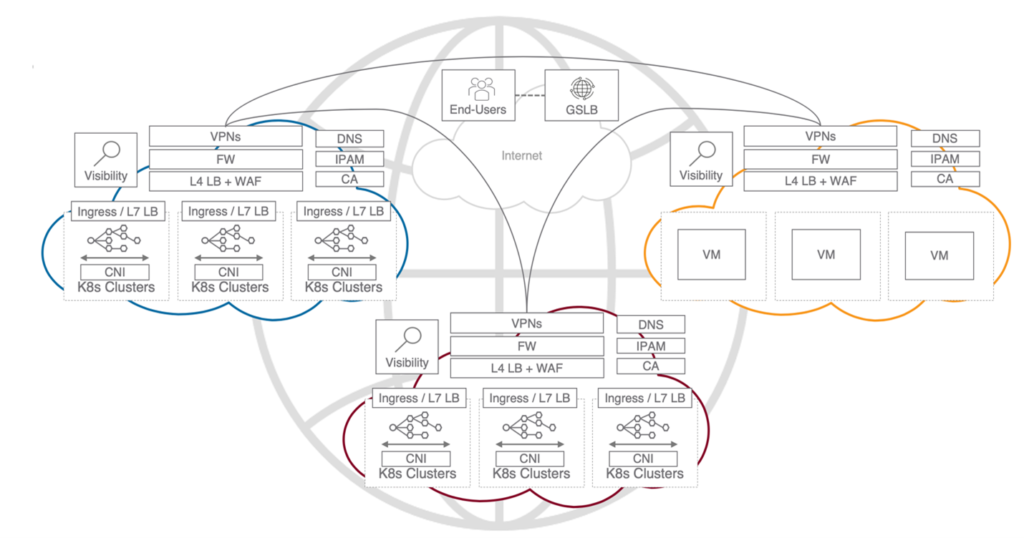

The combined offering brings together L4 load balancing, an ingress controller, global server load balancing (GSLB), web application security, integrated IP address management (IPAM) and domain name system (DNS) services, encryption and an extensible policy framework for intelligent traffic management and security. IT teams can now centrally manage end-to-end application traffic routing, resiliency and security policies via Tanzu Service Mesh.

The VMware Modern Apps Connectivity offering has been certified to work with VMware Tanzu, a curated instance of Kubernetes, as well as Amazon Elastic Kubernetes Service (EKS), with preview support available for Red Hat OpenShift, Microsoft Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE).

The launch of VMware Modern Apps Connectivity comes at a time when organizations are realizing that, as the microservices in their application environments multiply, they need a consistent set of networking services those microservices can employ. Today, most microservices rely on an API gateway to communicate with one another, but as the number of microservices grows, organizations need a service mesh to manage communications between APIs at scale.

Naturally, there’s a lot of contentious debate over the right type of service mesh to implement. VMware has thrown its weight behind Istio, which was originally created by Google, IBM and Lyft. In some cases, IT teams may wind up deploying different service meshes depending on whether developers or an IT operations team drives the decision to employ one.

Regardless of who makes the decision, one thing is apparent: a service mesh presents developers with a single layer of abstraction above a dizzying array of low-level network protocols and APIs. Via the service mesh, it becomes simpler for developers to programmatically invoke network services in a way that should ultimately make it easier to integrate network operations within a larger DevOps workflow. Today, it’s still not uncommon in on-premises IT environments for a DevOps team to be able to deploy a virtual machine in a matter of minutes but then wait several weeks for networking services to be made available.

It’s too early to say to what degree a service mesh may fundamentally change the way network services are managed. However, the implications of deploying a service mesh go far beyond simply enabling some APIs to communicate more easily with one another.