Rafay Systems Automates Kubernetes Fleet Management

Rafay Systems today added an Automated Fleet Operations capability to its Kubernetes management platform that makes it simpler to keep large numbers of clusters updated.

At the same time, Rafay Systems announced that its Kubernetes Operations Platform (KOP) has been certified for inclusion in the Red Hat Ecosystem Catalog, which lists third-party extensions for Red Hat OpenShift, a platform based on a distribution of Kubernetes.

Mohan Atreya, senior vice president of products and services for Rafay Systems, said the Automated Fleet Operations capability uses a rules engine embedded in the company’s KOP to first verify that applications can run successfully on an updated instance of Kubernetes and only then automatically update the clusters.

Many organizations that run multiple Kubernetes clusters are struggling to manage several versions of Kubernetes at once, with some still running releases as old as version 1.12. The latest officially supported versions of Kubernetes run from 1.24 to 1.27.

In theory, organizations are supposed to update Kubernetes clusters three times a year to stay current with release cycles. In practice, many Kubernetes clusters are not updated because IT teams are concerned that an application depends on a deprecated application programming interface (API) that will no longer function after the upgrade.
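To make that concern concrete, here is a minimal Python sketch of the kind of pre-upgrade check involved. It is not Rafay's rules engine, whose internals the company has not described here; the function names are hypothetical, and the lookup table is an illustrative, incomplete sample of API versions that Kubernetes stopped serving in the releases shown.

```python
# Illustrative, incomplete sample: (apiVersion, kind) -> first Kubernetes
# (major, minor) release in which that API version is no longer served.
REMOVED_IN = {
    ("extensions/v1beta1", "Deployment"): (1, 16),
    ("extensions/v1beta1", "Ingress"): (1, 22),
    ("networking.k8s.io/v1beta1", "Ingress"): (1, 22),
    ("batch/v1beta1", "CronJob"): (1, 25),
    ("policy/v1beta1", "PodSecurityPolicy"): (1, 25),
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): (1, 26),
}


def parse_version(text):
    """Turn '1.25' or 'v1.25.3' into the tuple (1, 25)."""
    major, minor = text.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def blocking_resources(resources, target_version):
    """Return the resources whose API version is not served at target_version.

    `resources` is a list of dicts with 'apiVersion' and 'kind' keys, as found
    in parsed Kubernetes manifests (hypothetical input shape for this sketch).
    """
    target = parse_version(target_version)
    blocked = []
    for res in resources:
        removed = REMOVED_IN.get((res["apiVersion"], res["kind"]))
        if removed is not None and target >= removed:
            blocked.append(res)
    return blocked


# Example inventory: one object on a removed API version, one on a stable one.
inventory = [
    {"apiVersion": "batch/v1beta1", "kind": "CronJob", "name": "nightly-report"},
    {"apiVersion": "apps/v1", "kind": "Deployment", "name": "web"},
]
```

With this inventory, an upgrade to 1.24 would pass the check, while an upgrade to 1.25 would flag the CronJob. A real check would also need to inspect live cluster objects and Helm releases, not just a static list.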

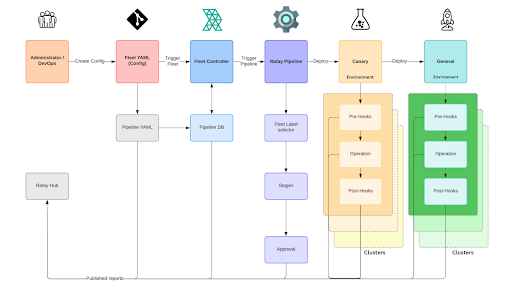

The Automated Fleet Operations capability makes it simpler to manage the upgrade process and eliminates many of the manual, error-prone processes that today make managing Kubernetes clusters challenging, noted Atreya. IT teams can create standard workflows that automate tasks such as upgrades and configuration patching across batches of clusters all at once, rather than painstakingly making sure each cluster has the precise settings required.
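As a rough illustration of what a batched workflow looks like in principle, the Python sketch below upgrades clusters in fixed-size groups and stops before the next group if any cluster in the current one fails. It is an assumption-laden sketch rather than a description of KOP: `rolling_upgrade`, `batches`, and the `upgrade` callback are hypothetical names, and the callback stands in for whatever tool actually performs the upgrade.

```python
def batches(items, size):
    """Split a list into consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("batch size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def rolling_upgrade(clusters, batch_size, upgrade):
    """Upgrade clusters batch by batch.

    `upgrade` is a caller-supplied function (hypothetical here) that takes a
    cluster name and returns True on success. Every cluster in a batch is
    attempted; if any fails, later batches are skipped.
    Returns three lists: (upgraded, failed, skipped).
    """
    upgraded, failed = [], []
    groups = batches(clusters, batch_size)
    for index, group in enumerate(groups):
        for cluster in group:
            if upgrade(cluster):
                upgraded.append(cluster)
            else:
                failed.append(cluster)
        if failed:
            # Halt the rollout so a bad change does not reach the whole fleet.
            skipped = [c for later in groups[index + 1:] for c in later]
            return upgraded, failed, skipped
    return upgraded, failed, []


# Example: five clusters, batches of two, with a stand-in upgrade function
# that fails for one cluster.
result = rolling_upgrade(["a", "b", "c", "d", "e"], 2, lambda name: name != "c")
```

Halting after a failed batch limits how far a faulty upgrade can spread, which is the main reason to roll out in batches rather than to every cluster at once.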

This latest edition of KOP comes as more organizations are starting to adopt platform engineering based on DevOps best practices to centralize the management of application development and deployment environments. The goal is to reduce the total cost of managing those environments while simultaneously reducing the time required to build and deploy applications. In the case of cloud-native applications, the level of skill currently required to manage Kubernetes clusters has also limited the pace at which modern applications are being deployed.

Of course, artificial intelligence (AI) may soon make managing Kubernetes clusters even more accessible to IT teams. In the meantime, however, a wide range of tasks can be automated more easily using a rules-based approach, noted Atreya.

Regardless of the approach to automation, organizations that are running tens of Kubernetes clusters will need to find more effective ways to manage them at scale. There simply are not enough IT professionals available today with the skills required to manage individual clusters manually. Instead, IT teams will require platforms that enable DevOps teams and IT administrators to collaborate using a mix of APIs and graphical tools to automate various tasks.

The issue, as always, is making sure the necessary management platforms are in place before the number of clusters being deployed becomes a major headache for everyone involved.