ngrok Adds Kubernetes Support to Ingress-as-a-Service Platform

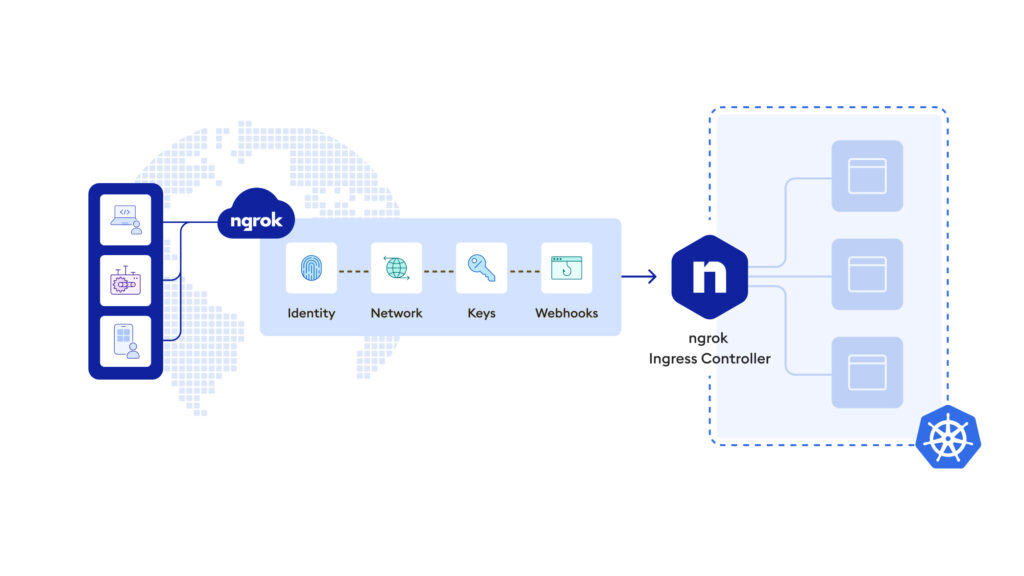

ngrok today added Kubernetes support to a cloud platform that makes it possible to provision ingress controllers via an application programming interface (API).

Alan Shreve, ngrok CEO, says ngrok Ingress Controller for Kubernetes generates a single image that enables a Kubernetes ingress controller to be deployed anywhere without requiring developers to understand what subnets and IP addresses are being employed. IT teams can then also take advantage of ngrok’s global network with thousands of points-of-presence (PoPs) to deploy those images as close as possible to where application services are being consumed, he adds.

That approach eliminates the need for complex configurations, sidecars or extra containers because ngrok makes it possible to integrate tasks such as certificate management and load balancer setup directly into Kubernetes workflows, says Shreve. Today, IT teams need to cobble together a range of Layer 4 through Layer 7 services themselves to accomplish those same tasks, he notes.

The company claims more than six million developers already use the ngrok service to automate the provisioning of a wide range of ingress controllers. Support for Kubernetes now makes it possible for that existing base of developers to similarly automate networking and security configurations across the entire networking stack, says Shreve.

As a result, the Kubernetes cluster becomes independent of the underlying network and its configuration, which makes it simpler to move clusters from one IT environment to another, he adds. That’s critical because it helps prevent IT organizations from getting locked into a specific cloud platform, says Shreve.

As organizations begin to deploy fleets of Kubernetes clusters, networking challenges become increasingly problematic. Many of the DevOps teams tasked with deploying these clusters don’t tend to have a lot of networking and security expertise, so the probability that mistakes will be made is high. The ngrok service reduces the chances of these mistakes by presenting developers with a familiar API construct to provision those services, says Shreve.
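To give a rough sense of what a declarative, API-style ingress request looks like, here is a minimal sketch that builds a standard Kubernetes `networking.k8s.io/v1` Ingress manifest in Python. It is not taken from ngrok's documentation: the helper `build_ingress`, the resource and service names, the hostname and the ingress class value `"ngrok"` are all assumptions made for illustration, and the class name in particular depends on how the controller is installed in a given cluster.

```python
import json


def build_ingress(name, host, service_name, service_port, ingress_class="ngrok"):
    """Return a Kubernetes networking.k8s.io/v1 Ingress manifest as a dict.

    All argument values are illustrative. The default ingress class "ngrok"
    is an assumption; use whatever class the installed controller registers.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which ingress controller should handle this resource.
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # Route every path under "/" to one backend service.
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names and hostname, for illustration only.
    manifest = build_ingress("demo-ingress", "demo.example.com", "demo-service", 80)
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -`. Note that the request names only a hostname and a backend service; it says nothing about subnets or IP addresses.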

It’s not entirely clear which IT teams will ultimately be responsible for networking in Kubernetes environments. Historically, networking has been managed by dedicated specialists using graphical tools to provision services. The problem is that while a DevOps team may be able to provision a virtual machine in a matter of minutes, it might end up waiting days for a networking team to provide access to network services. An API approach to provisioning network services, delivered through an ingress-as-a-service platform, should make it easier for IT teams to finally converge DevOps and network operations.

Kubernetes clusters are already challenging to manage, so any effort to reduce network complexity can only help to make the platform more accessible to IT organizations. While Kubernetes may be one of the most powerful IT platforms to find its way into mainstream IT environments in recent memory, its inherent complexity also conspires to limit adoption. The challenge now is finding a way to abstract away all the complexity to make it simpler for more organizations to build and deploy highly distributed cloud-native applications wherever and however they best see fit.