Solo.io Embraces Cilium Container Networking Platform

At the KubeCon + CloudNativeCon Europe 2022 conference, Solo.io announced it has added support for the open source Cilium container networking platform to Gloo Mesh, its curated distribution of the open source Istio service mesh.

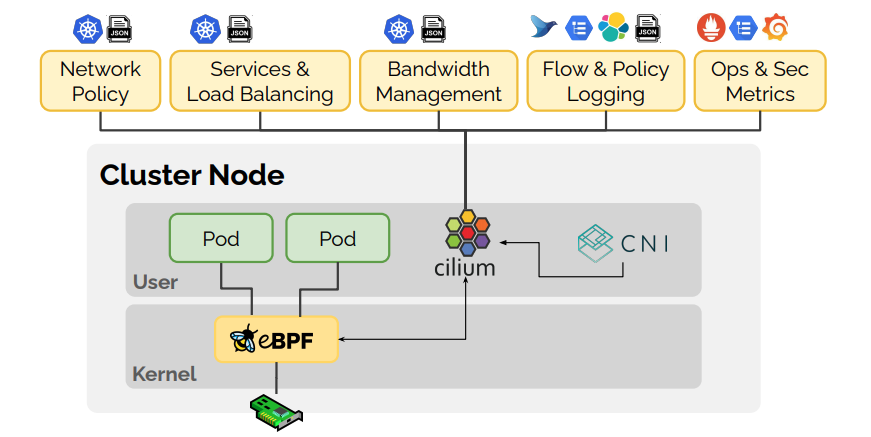

Cilium provides network connectivity and load balancing between application workloads. It operates at Layer 3/4 to provide traditional networking and security services, and at Layer 7 to secure application protocols.

As one of the first networking frameworks to take advantage of the extended Berkeley Packet Filter (eBPF) in the Linux kernel, Cilium can be substantially faster than the legacy network overlays that IT teams use to connect Kubernetes clusters. eBPF allows sandboxed bytecode to be loaded dynamically into the Linux kernel and attached to network I/O, application sockets and tracepoints, which makes it possible to embed security, networking and observability software deeper into the operating system.

Brian Gracely, vice president of product and strategy for Solo.io, says that while Cilium is one of several network frameworks that Gloo Mesh supports, this marks the first time Solo.io has reached down to Layer 3 of the networking stack. Solo.io will expand its role in contributing to and maintaining the Cilium project as part of an effort to ensure the continuous evolution of a cohesive, secure and performant application networking architecture spanning Layer 2 through Layer 7, he notes. He adds, however, that Solo.io will continue to support container networking platforms based on iptables within Gloo Mesh.

Adoption of Linux distributions that support eBPF is still in its early days. However, it’s already apparent that most networking, security, storage and observability tools will soon be upgraded to take advantage of eBPF.

It’s not clear to what degree support for Cilium might spur further adoption of Istio, but as the number of Kubernetes clusters deployed in production environments continues to grow, networking is becoming more challenging. IT teams initially adopted service meshes to manage application programming interfaces (APIs) in place of proxy software or a legacy API gateway. As more organizations find themselves managing hundreds or thousands of external- and internal-facing APIs that provide access to both monolithic applications and microservices-based applications deployed on Kubernetes clusters, the need for a service mesh becomes more apparent.

At the same time, a service mesh provides a programmable layer of abstraction above network and security infrastructure and eliminates the need for developers to master multiple low-level networking APIs. That abstraction layer is also extensible, to the point where developers can define rules within a service mesh that, for example, create a firewall for a specific set of APIs using WebAssembly (Wasm) modules.

While there are multiple service mesh options, Gracely says Istio will soon become the de facto standard in much the same way Kubernetes overshadowed other container orchestration platforms.

Advocates of rival service mesh platforms naturally disagree. What is certain is that as Kubernetes environments continue to evolve, the need for more sophisticated approaches to networking that can be integrated within a larger DevOps workflow is approaching a tipping point.