LoftLabs Adds Virtual Nodes to Isolate Kubernetes Workloads

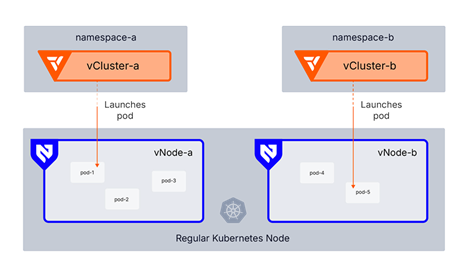

LoftLabs today at the KubeCon + CloudNativeCon Europe 2025 conference introduced a lightweight runtime, dubbed vNode, that makes it possible to isolate workloads running in a virtual instance of a Kubernetes cluster at a more granular level.

Company CEO Lukas Gentele said vNode extends the virtualization capabilities developed by LoftLabs down to individual nodes running privileged workloads within a multi-tenant virtual cluster built using its distribution of open source vCluster software.

In addition, LoftLabs is updating its distribution of vCluster to add snapshot and restore capabilities to simplify backup and recovery or, if needed, migration of clusters.

Finally, LoftLabs is also integrating the open source edition of the platform with the Rancher management framework for Kubernetes to enable IT teams to create, manage and update virtual clusters in Rancher, without requiring them to also deploy vCluster Platform software.

Collectively, these capabilities will make it simpler for more IT organizations to create Kubernetes environments more cost-effectively, even for their most sensitive workloads, said Gentele.

That approach is fundamentally more efficient than relying on alternative virtualization technologies, such as micro virtual machines, because it reduces the number of system calls that would otherwise need to be made to the underlying operating system, he added.

At a time when more organizations than ever are concerned about the total cost of IT, vCluster software provides a means for rapidly spinning up virtual clusters using a shared set of infrastructure.

Those virtual clusters can then be managed by DevOps or platform engineering teams using command line interfaces (CLIs), custom resource definitions (CRDs), Helm charts, infrastructure-as-code (IaC) tools and YAML files, or by IT administrators using a graphical user interface. The latter approach expands the available pool of IT talent capable of managing Kubernetes clusters. Many Kubernetes clusters are initially deployed by DevOps engineers who have experience working with YAML files, but as the number of clusters increases, the need to enable IT administrators who have less programming expertise has become more pressing.

Virtual Kubernetes clusters today are used most widely in pre-production environments to reduce the total number of physical Kubernetes servers an organization needs to deploy. However, the number of instances of virtual clusters being used in production environments is growing as more organizations look to reduce the total cost of IT, and more organizations find themselves managing fleets of Kubernetes clusters.

With more organizations than ever deploying Kubernetes clusters at an unprecedented scale, there is a growing need to isolate workloads on individual clusters to minimize potential disruption should the underlying infrastructure become unavailable. However, as organizations find themselves managing fleets of Kubernetes clusters, the cost of managing them quickly becomes prohibitive.

It’s not clear just how many virtual Kubernetes clusters have been spun up in recent years, but given the number of virtual clusters that could run on a single physical cluster, it may not be long before virtual clusters far outnumber physical clusters, with the number of virtual nodes also soon increasing exponentially.