Zerto Preps Data Protection Platform for Kubernetes

Zerto is preparing to make a version of its IT Resilience Platform that runs natively on Kubernetes available next year.

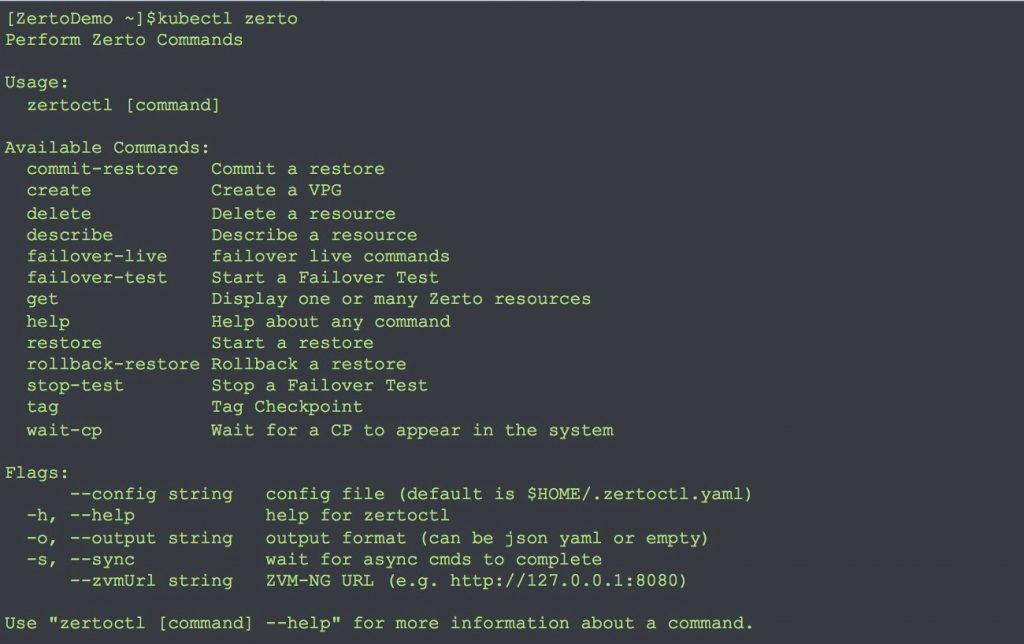

Deepak Verma, director of advanced technology at Zerto, says the goal is to make backup and recovery an extension of any automated continuous integration/continuous delivery (CI/CD) process using a kubectl plugin and native Kubernetes tooling.

A formal early adopter program for an instance of the IT Resilience Platform, which is currently in preview, will be launched later this year. The first Kubernetes environments that will be supported by Zero include Amazon Elastic Kubernetes Service (Amazon EKS), Google Kubernetes Engine (GKE), IBM Cloud Kubernetes Service, Microsoft Azure Kubernetes Service (AKS), Red Hat OpenShift and VMware Tanzu.

Verma says the Zerto approach to data protection and backup recovery will streamline a process today that requires the direct intervention of an IT administrator. Treating data protection as code will enable DevOps teams to take advantage of continuous journaling of persistent volumes, statefulsets, deployments, services and configMaps along with replication capabilities delivered via an IT Resilience Platform that has already been proven in legacy virtual machine environments, says Verma.

Fresh off raising an additional $55 million in funding, Zerto claims to have more than 8,000 global customers.

The IT Resilience Platform is designed to provide granular journaling of thousands of recovery checkpoints to overcome challenges of synchronous replication while providing more point-in-time recovery options than traditional backup software, says Verma.

IT teams can rehydrate a full running copy of an entire application in minutes from any point in time during an application testing process or to recover from data corruption or a ransomware attack, he adds.

It takes a fair amount of time to back up any software build. It’s not uncommon for each build to be about 500GB in size. The more concurrent application development projects there are, the more builds there are to back up and archive. Many organizations are discovering there is no longer enough time in a 24-hour period to complete all the backup tasks required.

DevOps processes aggravate that issue further because, after all, the primary goal is to develop applications faster, which invariably leads to more builds that often fail. What was once a task to perform on an occasional basis is now quickly becoming an ongoing process that needs to be automated.

Of course, once an application is deployed in a production environment, all the normal backup and recovery requirements for both the Kubernetes cluster and the applications deployed on it also apply. At a time when organizations are deploying more microservices-based applications that have inherent dependencies, recovery time objectives (RTO) for individual microservices have never been more critical. Most microservices applications are designed to gracefully absorb the loss of any microservice. However, there’s usually a performance hit as application programming interface (API) calls are redirected until a microservice is brought back online.

As DevOps teams continue to programmatically invoke infrastructure as code, it’s not only a matter of time before the same concepts are applied to data protection. Rather than having to wait on an IT administrator, most DevOps teams will simply prefer to automate the whole data protection process on end to end whenever possible.