Security in Kubernetes: Your Stack is Lying to You

Cloud-native adoption is accelerating, but security practices remain anchored in the past. Many companies still rely on perimeter-based models designed for static infrastructure, not for Kubernetes’s dynamic, distributed nature.

According to the Cloud Native Computing Foundation (CNCF), in 2024, production use of Kubernetes rose to 80%, up from 66% the year before. Including those piloting or evaluating it, adoption reached a whopping 93%, making Kubernetes a core component of how modern systems run.

Meanwhile, ephemeral workloads, application programming interface (API)-driven operations and microservices architectures are exposing the limits of legacy controls. Security must evolve in parallel, but it hasn’t.

This paper outlines why traditional tools fall short and what is required to secure Kubernetes environments effectively.

Source: CNCF

Why Traditional Security Breaks in Kubernetes

Challenges

Legacy security was built on assumptions that no longer hold: fixed IPs, long-lived workloads and centralized control.

Traditional tools rely on static constructs: IP allowlists, perimeter firewalls and host-based agents, which assume predictable infrastructure and slow change cycles. In conventional environments, workloads are tied to physical hosts, network boundaries are clear and traffic patterns are relatively stable. That model falls apart in Kubernetes.

Volatility is core to Kubernetes.

Kubernetes challenges traditional norms with dynamic pod life cycles, self-healing systems and declarative infrastructure.

✔️ Pods spin up and down in seconds, each with unique IPs and metadata.

✔️ Containers abstract away the host, run on shared kernels and move freely across nodes and clusters.

✔️ Workloads are deployed declaratively through continuous integration and continuous deployment (CI/CD) pipelines, where infrastructure is defined as code and changes occur continuously.

✔️ Static security controls cannot keep pace; they lag or are bypassed entirely.

Kubernetes architectures are also API-driven and microservice-based. Dozens or even hundreds of internal services communicate through internal APIs, turning each one into a potential attack surface. Without identity awareness and workload-level controls, lateral movement becomes easy and often invisible. Traditional firewalling offers no visibility into pod-to-pod traffic and cannot enforce intent-aware policies at runtime.
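The gap between static and workload-level controls can be illustrated with a minimal sketch. It assumes a hypothetical `Pod` record and two hypothetical checks, one keyed on IP address and one keyed on labels; the pod names, IPs and labels are invented for illustration and do not come from any real cluster.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical, simplified view of a pod: name, current IP and labels."""
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


def allowed_by_ip(source: Pod, ip_allowlist: set) -> bool:
    """Static control: trust is tied to an address that changes on reschedule."""
    return source.ip in ip_allowlist


def allowed_by_labels(source: Pod, selector: dict) -> bool:
    """Workload-level control: trust is tied to labels that follow the workload.

    Note: an empty selector matches every pod, so it should be used with care.
    """
    return all(source.labels.get(key) == value for key, value in selector.items())


# The same frontend workload before and after it is rescheduled (invented values).
before = Pod("frontend-7d9f", "10.1.4.17", {"app": "frontend"})
after = Pod("frontend-x2kq", "10.1.9.203", {"app": "frontend"})

ip_allowlist = {"10.1.4.17"}
selector = {"app": "frontend"}

print(allowed_by_ip(before, ip_allowlist), allowed_by_ip(after, ip_allowlist))    # True False
print(allowed_by_labels(before, selector), allowed_by_labels(after, selector))    # True True
```

The IP rule silently stops matching once the pod is replaced, while the label rule keeps following the workload. This is the same idea that label selectors in Kubernetes network policies rely on.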

Consequences

Legacy tools become brittle, misaligned and dangerously permissive. Because workloads in Kubernetes communicate via domain name system (DNS) and API calls rather than fixed endpoints, traditional monitoring tools lose the ability to trace which identity is doing what. Host-based agents often miss container context, especially when lifespans are measured in minutes. Audit trails become incomplete, detection capabilities degrade and security teams fall back on overly broad policies just to keep systems running.

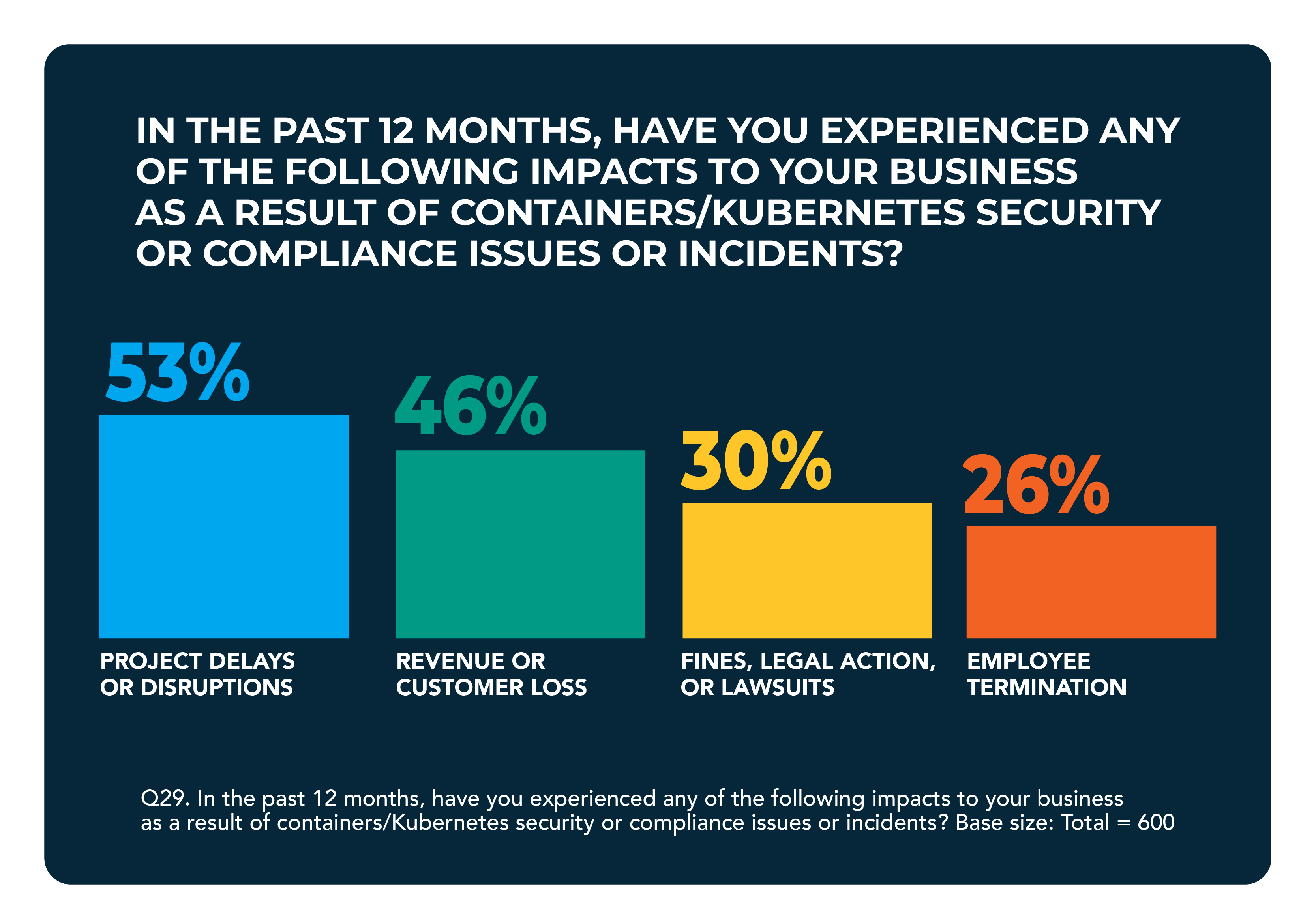

According to The State of Kubernetes Security Report 2024, 67% of organizations have either delayed or slowed the deployment of containerized applications due to security concerns. However, the consequences of Kubernetes and container security challenges extend well beyond project delays. Nearly one-third (30%) reported receiving fines, while 26% said security incidents led to employee dismissals, both of which can erode operational stability by disrupting teams and draining institutional knowledge.

In even more severe cases, 46% of respondents acknowledged experiencing customer or revenue loss tied to a security breach. When resources are diverted toward incident response and remediation, growth initiatives — including product rollouts — often get pushed aside, compounding the overall business impact.

Source: Red Hat

Takeaways

Traditional security policies and practices are insufficient for Kubernetes environments primarily because Kubernetes introduces complexities and dynamics that differ significantly from conventional IT infrastructure.

❗Dynamic Infrastructure

- Traditional Approach: Security policies are typically static and infrastructure-focused, designed for fixed, predictable environments.

- Kubernetes Challenge: Kubernetes employs highly dynamic infrastructures with ephemeral containers, frequently changing pods and rapid scaling, making static policies ineffective.

❗Containerization Complexity

- Traditional Approach: Traditional security tools often monitor hosts and networks, not individual containers.

- Kubernetes Challenge: Containers introduce additional abstraction layers (e.g., Docker, CRI-O, containerd), increasing complexity. Traditional tools cannot effectively see inside these layers.

❗Microservices Architecture

- Traditional Approach: Security models were historically designed for monolithic architectures with predictable communication paths.

- Kubernetes Challenge: Kubernetes orchestrates distributed microservices with complex inter-service communications. Traditional perimeter-based security becomes impractical in this environment.

❗Lack of Visibility and Observability

- Traditional Approach: Traditional monitoring solutions rely on fixed resources, known assets and relatively stable traffic patterns.

- Kubernetes Challenge: Container environments are transient, with workloads continuously spinning up and down. This makes traditional asset management, logging and monitoring solutions insufficient.

❗API-Driven Operations

- Traditional Approach: Security typically focuses on protecting infrastructure using firewalls, intrusion detection systems/intrusion prevention systems (IDS/IPS) or endpoint protection.

- Kubernetes Challenge: Kubernetes primarily operates through APIs. As a result, securing APIs becomes a priority over traditional perimeter-based network security. Attack vectors shift from physical endpoints to API endpoints.

❗Configuration Complexity

- Traditional Approach: Static policies are applied to configuration management, with well-defined guidelines and infrequent updates.

- Kubernetes Challenge: Kubernetes configurations are complex and change frequently (e.g., manifests, YAML files, Helm charts). Misconfigurations are common and can lead to vulnerabilities that often fall outside the scope of traditional compliance frameworks.

❗Automated Scaling

- Traditional Approach: Security audits and compliance checks are manual or semi-automated and performed periodically.

- Kubernetes Challenge: Kubernetes auto-scales resources quickly, making it nearly impossible to manually review or control every deployment. Security must be integrated into CI/CD pipelines and automated.

❗Identity and Access Management Complexity

- Traditional Approach: Centralized identity and access management (IAM) solutions manage users, roles and permissions based on a static structure.

- Kubernetes Challenge: Kubernetes implements fine-grained role-based access control (RBAC) and namespaces, requiring nuanced policies for each workload, user or service. Traditional IAM solutions often lack the granularity necessary.

❗Shared Responsibility Model

- Traditional Approach: Responsibility is clearly defined, typically involving fewer parties.

- Kubernetes Challenge: Kubernetes security spans cloud providers, clusters and developers, requiring a more nuanced shared responsibility model. Traditional approaches do not adequately address the multiple roles and responsibilities involved.

Kubernetes-Native Security: What You Actually Need

1. Container-Aware Security Tools

Container-aware security solutions operate at the pod and container level, providing real-time visibility into image provenance, running processes, network activity and misconfigurations.

These tools understand Kubernetes constructs such as namespaces, deployments and labels, enabling precise policy enforcement tied to workload identity. They also support container runtime introspection and can trace activity back to a specific container image or developer pipeline. This is essential in a system where workloads may exist for only minutes. Without this level of granularity, threats can slip through undetected or generate noise that overwhelms response teams.

2. Zero-Trust Architectures

In Kubernetes, the perimeter is fluid, and internal (east-west) traffic often exceeds ingress traffic. Zero-trust models operate on the assumption that every request could be malicious.

Rather than relying on static network boundaries, identity becomes the primary control point. Enforcing identity-aware communication between services is key to preventing lateral movement. This is typically done with mutual Transport Layer Security (mTLS) and workload identity based on SPIFFE (Secure Production Identity Framework for Everyone) and SPIRE (the SPIFFE Runtime Environment).
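As a rough illustration of identity as the control point, the sketch below authorizes a call based on the caller's SPIFFE ID rather than its IP address. The trust domain `example.org`, the `ns/<namespace>/sa/<service-account>` path layout and the `ALLOWED_CALLERS` table are assumptions made for this example; the path layout is a common convention, not something SPIFFE mandates. In a real deployment the ID would be taken from a certificate verified during the mTLS handshake, never from an unauthenticated string.

```python
from urllib.parse import urlparse

TRUST_DOMAIN = "example.org"  # hypothetical trust domain

# Hypothetical policy: callee service -> (namespace, service account) pairs allowed to call it.
ALLOWED_CALLERS = {
    "payments": {("shop", "checkout")},
}


def parse_spiffe_id(spiffe_id: str):
    """Return (namespace, service_account), or None if the ID is not acceptable.

    Assumes the path layout ns/<namespace>/sa/<service-account>.
    """
    parsed = urlparse(spiffe_id)
    if parsed.scheme != "spiffe" or parsed.netloc != TRUST_DOMAIN:
        return None
    parts = parsed.path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        return None
    return parts[1], parts[3]


def is_call_allowed(caller_spiffe_id: str, callee: str) -> bool:
    """Deny by default: unknown identities and unknown callees are rejected."""
    identity = parse_spiffe_id(caller_spiffe_id)
    if identity is None:
        return False
    return identity in ALLOWED_CALLERS.get(callee, set())


print(is_call_allowed("spiffe://example.org/ns/shop/sa/checkout", "payments"))  # True
print(is_call_allowed("spiffe://example.org/ns/shop/sa/frontend", "payments"))  # False
```

Because the decision depends only on the verified identity, it stays valid when the calling pod is rescheduled and receives a new IP address.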

Zero-trust also enables fine-grained access controls on Kubernetes APIs, ensuring users and services receive only the exact permissions they need.
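One simple way to check for over-permissive access is to scan RBAC roles for wildcards. The sketch below is a minimal example, assuming the role has already been loaded into a Python dictionary; the `ci-deployer` role and its rules are invented for illustration, while the `rules`, `apiGroups`, `resources` and `verbs` fields follow the Kubernetes RBAC `Role` schema.

```python
def find_overbroad_rules(role: dict) -> list:
    """Return one finding per wildcard found in a Role or ClusterRole rule."""
    findings = []
    for index, rule in enumerate(role.get("rules", [])):
        for key in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(key, []):
                findings.append(f"rule {index}: wildcard in {key}")
    return findings


# Hypothetical role, written as the dictionary a YAML loader would produce.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "ci-deployer", "namespace": "shop"},
    "rules": [
        {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list", "patch"]},
        {"apiGroups": [""], "resources": ["*"], "verbs": ["*"]},
    ],
}

print(find_overbroad_rules(role))
# ['rule 1: wildcard in resources', 'rule 1: wildcard in verbs']
```

A check like this only catches the most obvious excess. Narrow-looking rules can still grant more than a workload needs, so it complements rather than replaces a review of what each identity actually uses.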

When combined with admission control and policy engines, this model protects both the control plane and the data plane from privilege abuse and injection risks.

3. Automated Configuration Validation and Compliance (Policy-as-Code)

Misconfiguration remains one of the most common causes of security incidents in Kubernetes environments. Static YAML files, Helm charts and Kustomize overlays are powerful — but when left unchecked, they introduce significant risk. Policy-as-code tools such as Open Policy Agent (OPA), Kyverno and Datree enable teams to codify security and compliance rules and enforce them at every stage of the pipeline, from development to deployment.

These tools integrate with admission controllers to block non-compliant resources before they reach the cluster. They also support drift detection and policy auditing across environments, which is especially critical in multi-cluster setups. Automation ensures consistency and removes the human bottleneck from configuration review.
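The kind of rule these tools enforce can be sketched in a few lines. The example below is plain Python, not Rego or a Kyverno policy, and the three rules (pinned images, no privileged containers, mandatory memory limits) are assumptions chosen for illustration. It takes a pod manifest as a dictionary and returns the violations an admission check might report.

```python
def validate_pod(pod: dict) -> list:
    """Return a list of policy violations for a pod manifest (empty means compliant)."""
    violations = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")

        # Rule 1: images must be pinned by digest or by a tag other than "latest".
        if "@" not in image:
            last_segment = image.rsplit("/", 1)[-1]  # ignore any registry host:port
            tag = last_segment.split(":", 1)[1] if ":" in last_segment else ""
            if tag in ("", "latest"):
                violations.append(f"{name}: image must be pinned to a tag or digest")

        # Rule 2: privileged containers are rejected.
        if container.get("securityContext", {}).get("privileged", False):
            violations.append(f"{name}: privileged containers are not allowed")

        # Rule 3: every container must declare a memory limit.
        if "memory" not in container.get("resources", {}).get("limits", {}):
            violations.append(f"{name}: memory limit is required")
    return violations


# Hypothetical manifest with one non-compliant and one compliant container.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {
        "containers": [
            {"name": "web", "image": "nginx:latest", "securityContext": {"privileged": True}},
            {
                "name": "proxy",
                "image": "registry.example.com:5000/proxy:1.4.2",
                "resources": {"limits": {"memory": "128Mi"}},
            },
        ]
    },
}

for violation in validate_pod(pod):
    print(violation)
```

Running the same function in a pre-commit hook, in the pipeline and at admission time is what gives policy-as-code its consistency: one rule set, evaluated at every stage.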

4. Runtime Container and Node Protection

Runtime protection ensures that containers and nodes behave as expected by detecting deviations such as unexpected process execution, abnormal network activity or privilege escalations.

Tools such as Falco and other detectors based on extended Berkeley Packet Filter (eBPF) hook into the kernel or container runtime to observe behavior in real time, typically with low performance overhead. This is crucial for spotting zero-day attacks or logic flaws that slip past static scanning. Runtime defense also enables policy-based response, such as isolating or terminating a compromised pod.
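To make the idea concrete, the sketch below evaluates a stream of runtime events against three simple rules and maps the resulting alerts to a response. The event fields (`type`, `process`, `uid`, `parent_uid`, `path`, `write`), the rules and the `RESPONSES` table are all hypothetical; this is not Falco's rule syntax or event format, only an illustration of rule-based detection followed by policy-based response.

```python
SHELLS = {"sh", "bash", "zsh", "ash"}

# Hypothetical policy: which response each alert triggers.
RESPONSES = {
    "shell spawned in container": "isolate",
    "write below /etc": "isolate",
    "privilege escalation to root": "terminate",
}


def detect(event: dict) -> list:
    """Return the alerts raised by a single runtime event."""
    alerts = []
    kind = event.get("type")
    if kind == "exec" and event.get("process") in SHELLS:
        alerts.append("shell spawned in container")
    if kind == "open" and event.get("write") and event.get("path", "").startswith("/etc/"):
        alerts.append("write below /etc")
    # A root process started by a non-root parent; a missing parent_uid is treated as root.
    if kind == "exec" and event.get("uid") == 0 and event.get("parent_uid", 0) != 0:
        alerts.append("privilege escalation to root")
    return alerts


def respond(alerts: list) -> str:
    """Pick the strictest response: terminate beats isolate, isolate beats none."""
    actions = {RESPONSES[alert] for alert in alerts}
    if "terminate" in actions:
        return "terminate"
    if "isolate" in actions:
        return "isolate"
    return "none"


# Invented events for a single pod.
events = [
    {"type": "exec", "pod": "shop/checkout-5c7", "process": "python", "uid": 1000, "parent_uid": 1000},
    {"type": "open", "pod": "shop/checkout-5c7", "path": "/etc/passwd", "write": True},
    {"type": "exec", "pod": "shop/checkout-5c7", "process": "bash", "uid": 0, "parent_uid": 1000},
]

for event in events:
    alerts = detect(event)
    print(event["pod"], alerts, respond(alerts))
```

Production detectors work from kernel-level telemetry and far richer rule sets, but the shape is the same: observe behavior, match it against expectations and act on the result.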

5. Continuous Security Testing Integrated with CI/CD

In Kubernetes, infrastructure is code, and code must be continuously tested.

Embedding security checks into CI/CD pipelines ensures vulnerabilities are caught early, long before they reach production. This includes image scanning for known vulnerabilities (Common Vulnerabilities and Exposures, or CVEs), secret detection, misconfiguration checks and dependency analysis.

Automated testing gates can prevent deployments that violate policy, maintaining compliance without slowing down development velocity. Trivy, Snyk and Checkov integrate seamlessly with CI tools such as GitLab CI, GitHub Actions and Jenkins. This shift-left approach is critical, as post-deployment remediation in Kubernetes is often too late or too noisy.
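A testing gate of this kind can be as small as a script that reads a scanner report and returns a non-zero exit code when blocking findings are present. The sketch below assumes a JSON report whose field names (`Results`, `Target`, `Vulnerabilities`, `VulnerabilityID`, `Severity`) are modeled on Trivy's JSON output and should be verified against the scanner version in use. The report contents, including the placeholder vulnerability IDs, are invented.

```python
import json

BLOCKING_SEVERITIES = {"HIGH", "CRITICAL"}  # assumed policy threshold


def blocking_findings(report: dict, blocking=BLOCKING_SEVERITIES) -> list:
    """Collect (target, vulnerability id, severity) for findings at blocking severity."""
    findings = []
    for result in report.get("Results") or []:
        # Scanners may omit the list or set it to null when nothing is found.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append(
                    (result.get("Target"), vuln.get("VulnerabilityID"), vuln.get("Severity"))
                )
    return findings


def gate(report: dict) -> int:
    """Return the exit code a pipeline step would use: 0 to pass, 1 to block."""
    return 1 if blocking_findings(report) else 0


# Invented report; the IDs are placeholders, not real CVEs.
sample_report = json.loads("""
{
  "Results": [
    {
      "Target": "shop/checkout:1.8.0 (debian 12)",
      "Vulnerabilities": [
        {"VulnerabilityID": "CVE-0000-0001", "PkgName": "libexample", "Severity": "LOW"},
        {"VulnerabilityID": "CVE-0000-0002", "PkgName": "libsample", "Severity": "CRITICAL"}
      ]
    },
    {"Target": "requirements.txt", "Vulnerabilities": null}
  ]
}
""")

print(blocking_findings(sample_report))
print("exit code:", gate(sample_report))  # exit code: 1
```

In a pipeline, the step would end with `sys.exit(gate(report))` so that a blocking finding fails the job. Keeping the threshold in one place makes it easy to tighten the policy over time.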

6. Kubernetes-Native Observability and Logging

Legacy monitoring stacks fall short in Kubernetes because they lack the necessary context. Visibility is required at the pod, namespace and service level.

Kubernetes-native observability tools, such as Prometheus, Loki and OpenTelemetry, are designed to understand workload dynamics and can correlate metrics, logs and traces with specific deployments or users. This correlation enables faster anomaly detection, easier root cause analysis and more reliable audit trails.

Crucially, observability must also span the control plane, including API server audit logs and etcd access, to detect abuse or misconfiguration.
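As an illustration of control-plane visibility, the sketch below scans API server audit events, one JSON object per line, for two signals: secret reads by unexpected identities and repeated denied requests. The fields used (`verb`, `user.username`, `objectRef.resource`, `responseStatus.code`) follow the Kubernetes `audit.k8s.io/v1` event schema. The allowlist, the threshold of three denials and the sample events are assumptions made for this example.

```python
import json
from collections import Counter

# Hypothetical allowlist of identities expected to read secrets.
EXPECTED_SECRET_READERS = {"system:serviceaccount:shop:checkout"}
DENIED_THRESHOLD = 3  # assumed threshold for flagging repeated 403 responses


def suspicious_events(lines) -> list:
    """Return alerts for unexpected secret access and repeated denied requests."""
    alerts = []
    denied = Counter()
    for line in lines:
        event = json.loads(line)
        user = event.get("user", {}).get("username", "<unknown>")
        resource = event.get("objectRef", {}).get("resource")
        code = event.get("responseStatus", {}).get("code")

        is_read = event.get("verb") in {"get", "list", "watch"}
        if resource == "secrets" and is_read and user not in EXPECTED_SECRET_READERS:
            alerts.append(f"unexpected secret access by {user}")
        if code == 403:
            denied[user] += 1

    for user, count in denied.items():
        if count >= DENIED_THRESHOLD:
            alerts.append(f"{count} denied requests from {user}")
    return alerts


def audit_line(user: str, verb: str, resource: str, code: int) -> str:
    """Build a minimal, invented audit event for the example."""
    return json.dumps({
        "kind": "Event",
        "apiVersion": "audit.k8s.io/v1",
        "verb": verb,
        "user": {"username": user},
        "objectRef": {"resource": resource, "namespace": "shop"},
        "responseStatus": {"code": code},
    })


frontend = "system:serviceaccount:shop:frontend"
log = [
    audit_line("system:serviceaccount:shop:checkout", "get", "secrets", 200),
    audit_line(frontend, "list", "secrets", 200),
    audit_line(frontend, "get", "pods", 403),
    audit_line(frontend, "get", "pods", 403),
    audit_line(frontend, "delete", "pods", 403),
]

for alert in suspicious_events(log):
    print(alert)
```

The same pattern applies whether the events are read from a log file, a webhook backend or a log pipeline. What matters is that each event carries the identity, the verb and the target resource.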

In a system where pods can come and go in seconds, visibility must be real-time and workload-aware. Logging must be structured, queryable and tightly integrated into the Kubernetes life cycle.

Final Thoughts

🔹 Kubernetes is not insecure. It is simply built on a different set of assumptions. Workloads are transient. APIs are the control plane. Environments are distributed and fast-moving. These traits make legacy security models, which were designed for static infrastructure, fundamentally misaligned.

🔹 Traditional tools like firewalls, security information and event management (SIEM) systems and endpoint agents are not obsolete, but without adaptation, they become a liability. They miss context, over-permit access and create a false sense of protection.

🔹 Security in Kubernetes must be purpose-built. That means authenticating workloads, enforcing policy at deployment time and continuously monitoring behavior at runtime. Zero-trust architecture, policy-as-code and automation are operational requirements for securing ephemeral, API-driven systems.

🔹 The organizations that succeed will not be the ones with the most tools. They will be the ones that treat security as code, embed it into every commit and align their practices with how Kubernetes works.