Red Hat Previews Data Science Service on OpenShift

A Red Hat OpenShift Data Science cloud service for Red Hat OpenShift platforms is now available as a field trial.

Will McGrath, principal architect for Red Hat OpenShift Data Science, says the service will make it simpler for organizations to build and deploy applications infused with machine learning (ML) algorithms on the Red Hat OpenShift distribution of Kubernetes. The service can be accessed as an add-on to Red Hat OpenShift Dedicated or Red Hat OpenShift Service on Amazon Web Services (AWS). As with most cloud services, IT teams will be able to consume ML tools and frameworks through a T-shirt sizing model, in which capacity is offered in a handful of preset tiers rather than configured resource by resource.

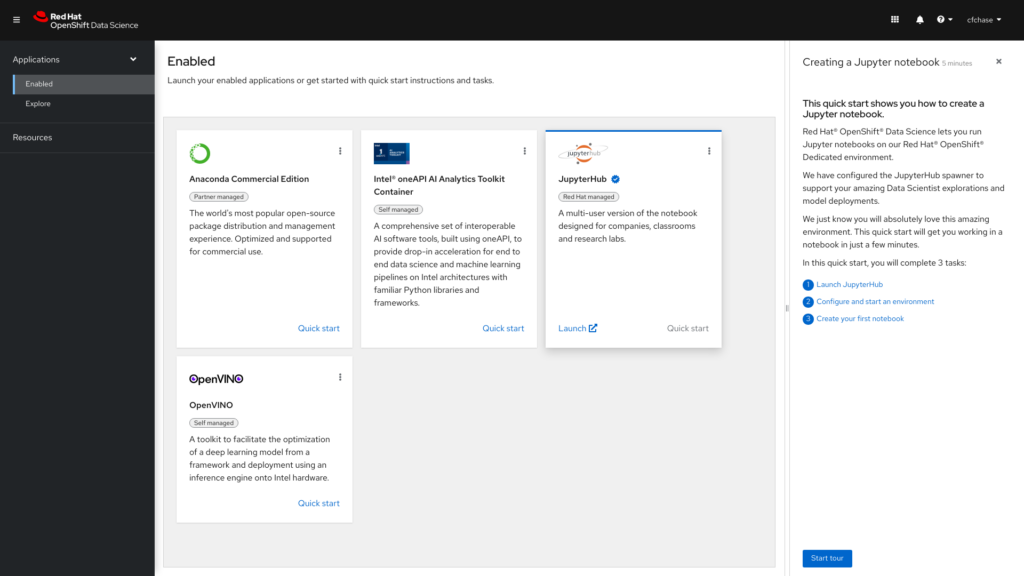

Red Hat OpenShift Data Science provides organizations with a managed service to develop, test and deploy ML models without having to set up and maintain a platform themselves. Red Hat is also providing access to hardware and software acceleration toolkits from Intel, with similar offerings from Nvidia planned.

Other third-party tools being made available via the Red Hat cloud service include Anaconda Commercial Edition to package, distribute and manage AI models; IBM Watson Studio with AutoAI to build, run and manage AI models; Seldon Deploy to simplify and accelerate deploying, managing and monitoring machine learning models; and Starburst Galaxy to unlock the value of data by making it faster and easier to access across a hybrid cloud. Jupyter notebooks and open source frameworks such as PyTorch and TensorFlow are also available.

The bulk of AI applications today are being built using containers. Otherwise, many of these applications would simply be too unwieldy to construct given the volume of data required to train an ML model. Kubernetes platforms, meanwhile, are being used to orchestrate all those containers.

As AI continues to evolve, the degree to which MLOps and DevOps practices will converge is still not clear. Data science teams have typically defined their own workflow processes using a wide range of graphical tools. However, as it becomes obvious that almost every application is going to be infused with machine learning and deep learning algorithms to some degree, the need to bridge the current divide between DevOps and data science teams will become more acute.

DevOps teams should assume not only that many more AI models are on the way but also that those models will need to be continuously updated. Each AI model is constructed based on a set of assumptions; as more data becomes available, AI models are subject to drift that makes them less accurate over time. Organizations may even determine that an entire AI model needs to be replaced because the business conditions on which its assumptions were based are no longer valid. One way or another, the updating and tuning of AI models is likely to become just another continuous process managed via a DevOps workflow.
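To make the idea of drift concrete, here is a minimal Python sketch of the kind of check a DevOps pipeline might run against a deployed model. It is not part of Red Hat OpenShift Data Science or any tool named in this article; the function names `windowed_accuracy` and `drift_detected`, the window size and the 0.05 tolerance are all illustrative assumptions.

```python
from statistics import mean


def windowed_accuracy(y_true, y_pred, window):
    """Return the accuracy of each consecutive, non-overlapping window.

    y_true and y_pred are equal-length sequences of labels in the order
    the predictions were made. A trailing partial window is ignored.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    scores = []
    for start in range(0, len(y_true) - window + 1, window):
        pairs = zip(y_true[start:start + window], y_pred[start:start + window])
        scores.append(mean(1.0 if t == p else 0.0 for t, p in pairs))
    return scores


def drift_detected(scores, baseline, tolerance=0.05):
    """Flag drift when the latest window falls more than `tolerance`
    below the accuracy measured when the model was first validated.

    The tolerance is a hypothetical threshold; a real team would tune it.
    """
    return bool(scores) and scores[-1] < baseline - tolerance
```

In a workflow like the one described above, a check of this kind could run on a schedule, with a positive result triggering a retraining job or a review of whether the model should be replaced outright.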

The only question now is whether IT organizations want to manage the platforms used to build AI models themselves or rely on a managed service that hands the management of those platforms to some other entity.