Docker Extends Reach to Streamline Building of AI Agents

Docker, Inc. today extended Docker Compose, its tool for creating container applications, so that developers can also define architectures for artificial intelligence (AI) agents using YAML files.

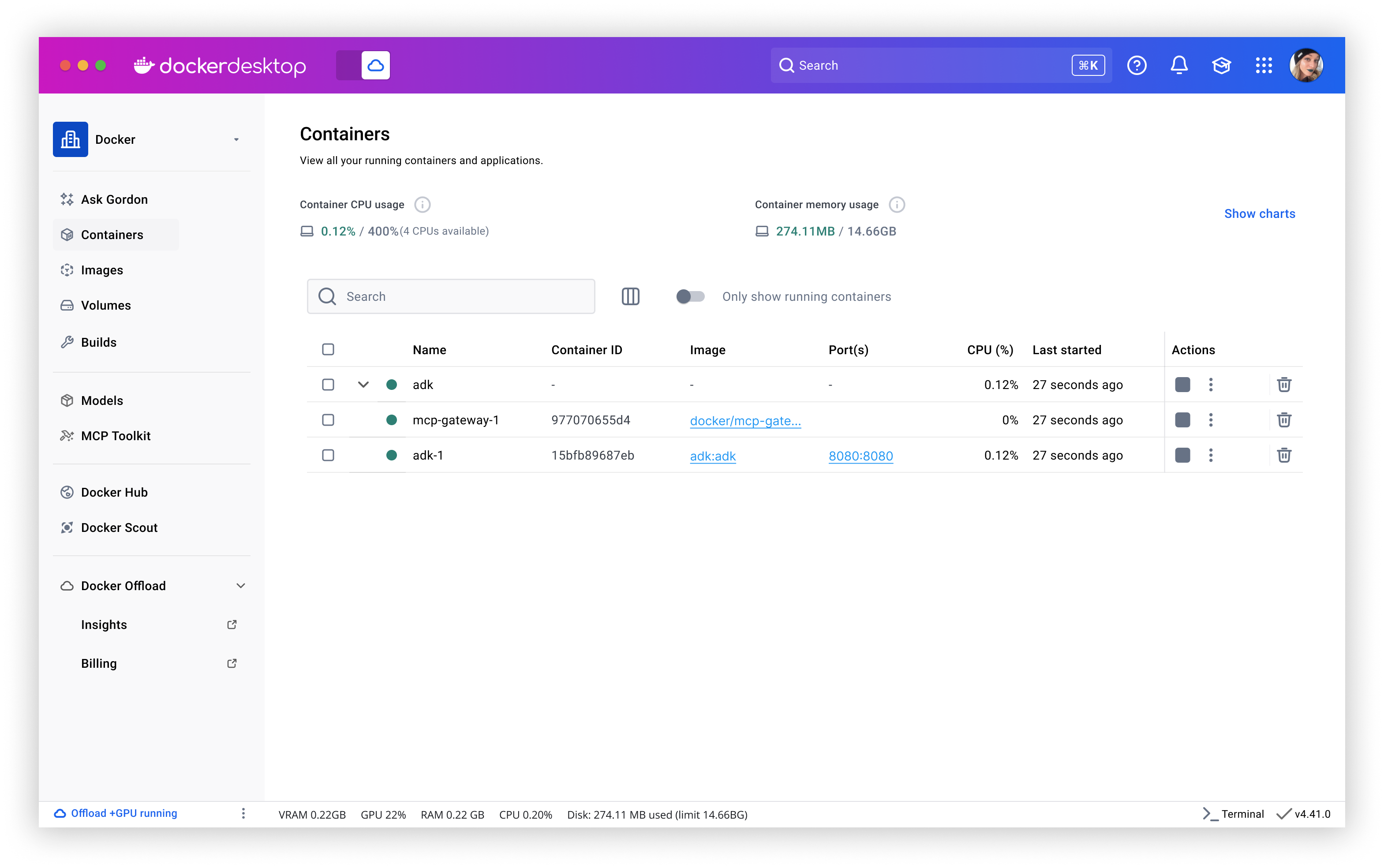

Announced at the WeAreDevelopers World Congress conference, the extension arrives alongside Docker Offload, a tool available in beta that lets developers, from within Docker Desktop, offload the running of AI models to graphics processing units (GPUs) running in cloud services from Google and Microsoft.

Andy Ramirez, SVP, marketing, for Docker, said these extensions to Docker Compose will also make it simpler for application developers to invoke a range of application programming interfaces for building AI applications, including CrewAI, Embabel, LangGraph, Sema4.ai, Spring AI and the Vercel AI software development kit (SDK).

Collectively, these capabilities will make it simpler for millions of application developers to use their existing container tooling to build AI applications that are defined in a single Docker Compose file, said Ramirez. More than 500 customers have already been given access to these tools in a closed beta, he added.

Docker, Inc. previously added support for a Model Context Protocol (MCP) Gateway that enables AI agents to communicate with various tools and applications. Originally developed by Anthropic, MCP is rapidly emerging as a de facto open standard. The Docker MCP Catalog, integrated into Docker Hub, builds on it to let developers discover, run and manage more than 100 MCP servers.
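To make the idea of agents talking to tools more concrete: MCP messages are JSON-RPC 2.0 objects, and a tool invocation uses the `tools/call` method. The sketch below only builds such a message in Python. The tool name `search` and its arguments are hypothetical, and how a given gateway transports the message is not covered here.

```python
import json
from itertools import count

# Simple counter so each request gets a distinct JSON-RPC id.
_request_ids = count(1)


def make_tool_call(tool_name, arguments):
    """Build an MCP-style JSON-RPC 2.0 request that invokes a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        # "name" selects the tool; "arguments" carries its inputs.
        "params": {"name": tool_name, "arguments": arguments},
    }


def encode(message):
    """Serialize the message to the JSON text that would go over the wire."""
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
example = make_tool_call("search", {"query": "docker compose"})
print(encode(example))
```

An agent framework would normally generate these messages itself; the point is that the payload is plain, inspectable JSON.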

The company also developed Docker Model Runner, a tool that embeds an AI inference engine into Docker Desktop, using the open-source llama.cpp library to make large language models (LLMs) available locally via the OpenAI application programming interface (API).
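Because the local model is exposed through the OpenAI API shape, existing client code should need little more than a different base URL. The following is a minimal sketch using only the Python standard library. The base URL, port and model name are assumptions for illustration and should be checked against the Docker Model Runner documentation for your setup.

```python
import json
import urllib.request

# Assumption: the local endpoint is reachable from the host at this URL.
BASE_URL = "http://localhost:12434/engines/v1"
# Assumption: a model with this name has already been pulled locally.
MODEL = "ai/smollm2"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Say hello in one sentence.")` would return the model's reply, provided a local endpoint is actually listening at the assumed address. Keeping request construction separate from sending makes the payload easy to inspect or redirect to a hosted endpoint later.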

It’s not clear at what pace those AI applications are now being built, but it’s already apparent that going forward, most new applications will include some type of AI capability. The overall goal is to streamline the development of these applications using a set of application development tools that many application developers already know, said Ramirez. That approach reduces total costs by minimizing the need to acquire separate tooling to build AI applications, he added.

At the moment, there is a major battle for the hearts and minds of those developers between new entrants and established providers of application development tools. Docker and other incumbents are betting that most application developers would prefer not to master new tools to build AI applications. However, a recent Futurum Group survey suggests the application development community is evenly split. Over the next 12 to 18 months, organizations plan to increase spending not only on AI code generation (83%) and agentic AI technologies (76%), but also on existing, familiar tools that have been augmented with AI.

Regardless of approach, the pace at which AI applications are built and deployed is only going to accelerate. The challenge now, given the intense level of competition between organizations, is to enable application developers to build and deploy those applications as fast as possible.