Docker, Inc. Embraces MCP to Make AI Agent Integration Simpler

Docker, Inc., today added support for the Model Context Protocol (MCP) to make it simpler for developers to invoke artificial intelligence (AI) agents using the existing tools they rely on to build container applications.

Nikhil Kaul, vice president of product marketing for Docker, Inc., said the Docker MCP Catalog and Docker MCP Toolkit are the latest AI extensions to the company’s portfolio of application development tools. Earlier this month, Docker made it possible for developers to run large language models (LLMs) on their local machines using Docker Model Runner, an extension to Docker Desktop, as part of an effort to make it simpler for them to build applications interactively.

That same philosophical approach can now be applied to building AI agents using Docker MCP Catalog and Docker MCP Toolkit, he added.

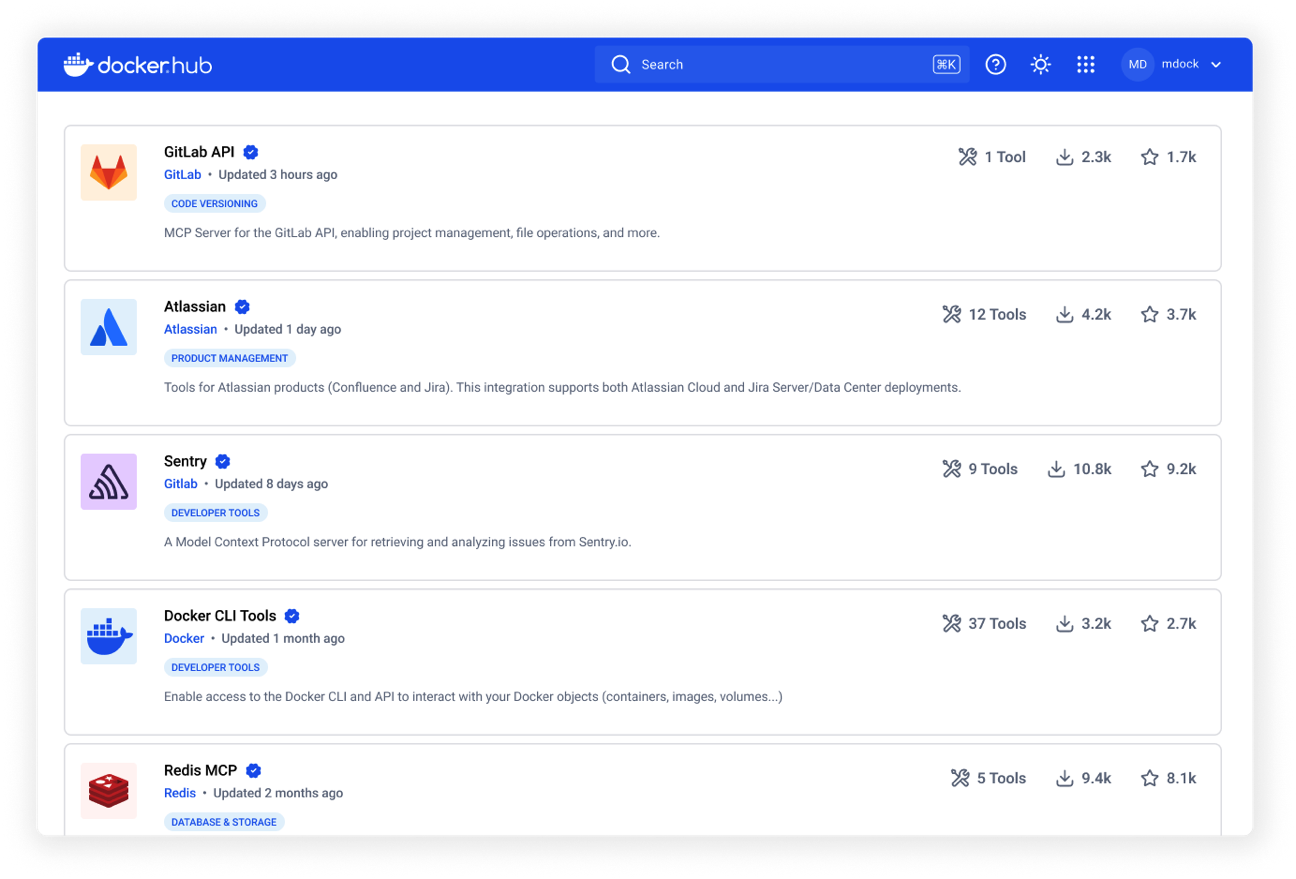

Originally developed by Anthropic, MCP is rapidly emerging as a de facto open standard that enables AI agents to communicate with a wide range of tools and applications. Docker MCP Catalog, integrated into Docker Hub, gives developers a centralized way to discover, run and manage more than 100 MCP servers from providers such as Grafana Labs, Kong, Inc., Neo4j, Pulumi, Heroku and Elastic from within Docker Desktop, noted Kaul.

Future updates to Docker Desktop will also enable application development teams to publish and manage their own MCP servers under controls such as registry access management (RAM) and image access management (IAM), and to store secrets securely.

In general, Docker, Inc. is committed to making it possible for application developers to build the next generation of AI applications without having to replace existing tooling, said Kaul. It’s not clear at what pace those AI applications are now being built, but it’s already apparent that most new applications will include some type of AI capability. It may now be only a matter of time before application developers are invoking multiple MCP servers to create workflows that might span hundreds of AI agents.

The challenge now is making it simpler to build those AI applications while letting developers keep the tools they already know how to use, said Kaul. What developers need most right now is a simple way to experiment with these emerging technologies within their existing software development lifecycle, he added.

The pace at which agentic AI applications are built and deployed will naturally vary from one organization to another. What is certain is that every application developer will be expected to have some familiarity with the tools and frameworks used to build AI applications. In fact, application developers who lack those skills may find their career prospects severely limited.

Fortunately, it’s becoming simpler to experiment with these tools and frameworks in a way that doesn’t require developers to abandon everything they have already learned about building modern applications using containers.