The Future of Platform-as-Code is Kubernetes Extensions

Infrastructure-as-Code: Where We Come From

In recent years, since the advent of Docker and the rising popularity of containers, the concept of infrastructure-as-code (IaC) has expanded. What started as APIs for provisioning concrete infrastructure like (virtual) machines, networking and storage slowly expanded to include the OS and Kubernetes, as well as their configuration and hardening policies. Where exactly IaC ends depends on your point of view: modern IaC tools like Terraform even support the deployment of workloads.

What has not changed are the reasons people got excited about the as-code movement in the first place. It all boils down to using the familiar tools (editors, CI/CD, etc.) and processes (code reviews, versioning, etc.) used in software development and applying them to the lower layers while at the same time making them descriptive, repeatable, shareable and, last but not least, automatable.

Toward Platform as Code: Where We’re Going

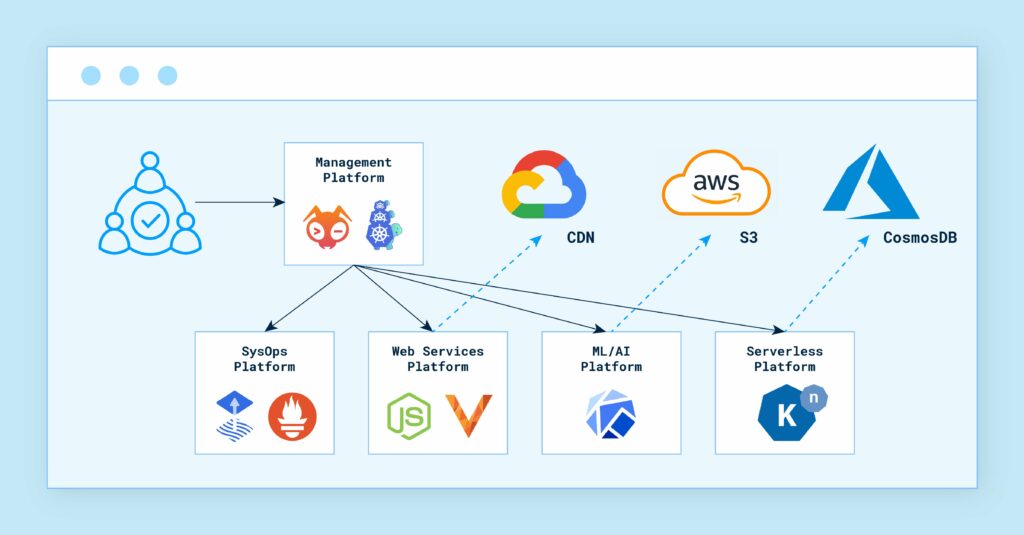

Now, the next step is expanding this concept and its benefits to the platform that we want to offer our developers. The goal is to build platform-as-a-service-like systems that abstract away the infrastructure and enable engineers to focus on their code. Just like with a PaaS, we’d ideally get benefits like self-service, standardization and shared best practices, as well as some type of security and compliance enforcement, without having to bother developers.

However, typical PaaS systems have some common pitfalls that we should avoid.

First, the abstractions of a PaaS often result in artificial limitations and, as software and developers grow and mature, they hit more of those limits. With traditional or closed PaaS systems, this leads to exceptions being modeled as (ugly) workarounds. Second, traditional PaaS often comes with a high degree of vendor lock-in. Third, we must ask the unpopular question: Is a single platform actually enough? Do your data science engineers need the same platform that your e-commerce team needs?

Kubernetes to the Rescue

“Kubernetes is a platform for building platforms.” You’ve probably heard some version of this statement if you follow Kubernetes thought leaders like Kelsey Hightower or Joe Beda.

Along the same lines, I would propose that Kubernetes can actually be the platform of choice for more than just containers. In fact, it can be the one thing we need to finally get to the platform-as-code utopia we envision.

The benefits of Kubernetes, both as an orchestrator and as a unified interface, form the basis of my argument. Kubernetes as an orchestrator brings us its famous reconciliation approach, which you could see as a stronger form of the declarative paradigm: controllers continuously compare the declared state with what is actually running and act to close the gap. It allows for codifying operational knowledge (in custom controllers, aka operators), which is more resilient and future-proof than building this knowledge into scripts of any form. Furthermore, the state it stores is a record of desire and not a record of status; storing the latter is a typical downside of state handling in common IaC tools.
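To make the reconciliation idea concrete, here is a minimal sketch in plain Python. It does not use any Kubernetes library; the `World` class, the `reconcile` function and the app names are all hypothetical stand-ins chosen for illustration. The point is only that the stored input is the desired state, and that each pass compares it with what is observed and closes the gap.

```python
from dataclasses import dataclass, field


@dataclass
class World:
    """Hypothetical stand-in for real infrastructure: app name -> running replicas."""
    running: dict = field(default_factory=dict)


def reconcile(desired: dict, world: World) -> list:
    """Run one reconciliation pass and return the actions that were taken."""
    actions = []
    # Create or scale anything that differs from the declared intent.
    for name, replicas in desired.items():
        observed = world.running.get(name)
        if observed != replicas:
            world.running[name] = replicas
            actions.append(f"scale {name}: {observed} -> {replicas}")
    # Remove anything that is running but no longer declared.
    for name in list(world.running):
        if name not in desired:
            del world.running[name]
            actions.append(f"delete {name}")
    return actions


# Desired state is the only thing we store; observed state is looked up each pass.
desired = {"web": 3, "worker": 2}
world = World(running={"web": 1, "legacy": 1})

first_pass = reconcile(desired, world)   # scales web, creates worker, deletes legacy
second_pass = reconcile(desired, world)  # no drift left, so no actions
```

Running the pass a second time yields no actions, which is the property that makes this approach more robust than a one-shot script: the loop can be repeated at any time and only acts when reality has drifted from the declared intent.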

Kubernetes, as a unified interface, brings us a common API with built-in features like authentication, rate limiting and auditing. Even better, this API has become the standard for cloud-native workload management and, thanks to its native extensibility, familiarity with the Kubernetes API carries over to API extensions. Because of Kubernetes’ success and popularity in recent years, there’s extensive tooling support, from traditional IaC through CI/CD to modern GitOps approaches.

Last but not least, a lot of companies have already been extending the API for many use cases, and in doing so have reached a first consensus on common abstractions for defining clusters, apps and infrastructure services from within Kubernetes.

Building Blocks for Kubernetes Platforms—Beyond Container Orchestration

First and foremost, we have the Cluster API project which reached production readiness with 1.0. For the uninitiated, Cluster API is an upstream effort towards a consensus API to declaratively manage the life cycle of Kubernetes clusters on any infrastructure. And if that sounds like just an ordinary API to you, rest assured that it includes working implementations of said API to get clusters spawned on many infrastructure providers out there, including the big hyperscalers as well as common on-premises solutions.

Now that we have clusters checked off, next are the applications and workloads in said clusters. For a full-featured cloud-native platform, you’ll want a base set of observability tooling, connectivity tools, tools that compose your developer pipelines and maybe even some additional security tooling or service meshes. Right now, as a community, we can at least agree on Helm as a common packaging format. However, how to actually deploy those Helm charts into clusters, especially in multi-cluster environments (which are becoming more and more common as it gets easier to manage clusters), is still an area where we haven’t reached consensus. If you’ve already jumped on the GitOps bandwagon, tools like Flux CD have abstractions like the HelmRelease that could help. Giant Swarm developed an open source Kubernetes extension called app-operator that extends Helm, adding multi-cluster functionality as well as multiple levels of configuration overrides. These ease the pain of configuration management in use cases where you deploy fleets of apps into fleets of clusters. It also prepares the way for including more metadata, like test results and compatibility information, in the deployment process.
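The idea of multiple levels of configuration overrides can be illustrated with a small deep-merge sketch. This is not how app-operator or Helm implement it; the function names, the three layers and all keys below are assumptions made up for this example. It only shows the general pattern of more specific layers overriding more general ones.

```python
from copy import deepcopy


def _merge_into(base: dict, override: dict) -> None:
    """Recursively copy values from override into base, in place."""
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(base.get(key), dict):
            # Both sides are mappings: merge key by key instead of replacing.
            _merge_into(base[key], value)
        else:
            # Scalars, lists and new keys: the override wins outright.
            base[key] = deepcopy(value)


def merge_layers(*layers: dict) -> dict:
    """Deep-merge config layers; later layers override earlier ones."""
    result: dict = {}
    for layer in layers:
        _merge_into(result, layer)
    return result


# Hypothetical layers, from most general to most specific.
chart_defaults = {"replicas": 1, "ingress": {"enabled": False, "host": "example.local"}}
fleet_overrides = {"ingress": {"enabled": True}}
cluster_overrides = {"replicas": 3, "ingress": {"host": "shop.example.com"}}

values = merge_layers(chart_defaults, fleet_overrides, cluster_overrides)
```

Here the fleet-wide layer only switches ingress on, the cluster-specific layer only sets the replica count and host, and everything else falls through from the defaults. The input layers are left unmodified, so the same defaults can be reused for every cluster in the fleet.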

One other type of resource that we can’t ignore is cloud provider services. Here, we see most of the hyperscalers developing their own native Kubernetes extensions so that you can spawn what they call first-party resources. First-party resources are things like managed databases that you create directly from within Kubernetes and then connect to from your cloud-native workloads. Another very interesting approach is that of Crossplane, an open source Kubernetes extension that enables users to assemble services from multiple vendors through the same extension, offering a layer of abstraction that reduces lock-in to the actual provider.

These are just basic extensions; there’s quite a lot of growth in the sector and more and more projects either use Kubernetes under the hood or extend it openly towards their use cases. In the context of building platforms-as-code, it is especially relevant to mention some of the more specific frameworks and extensions that cover specialized but common use cases like MLOps/AI with the Kubeflow project and edge computing with KubeEdge.

Vision and Challenges Going Forward

We are still in the early days of Kubernetes extensions specifically and platform-as-code in general. Most standardization efforts are still in the early stages but are moving rapidly toward consensus and production readiness.

The most important area that we need to address is the user experience of such extensions. This is not limited to improving the validation and defaults of our APIs; it also covers the discovery of extensions and the quality of their documentation. Furthermore, once we move closer to production with some of these standards, we as a community need to be careful to keep the APIs composable and to foster interaction without tightly coupling systems. Last but not least, debuggability and traceability in complex systems with many Kubernetes extensions can still be improved.

One sure thing, however, is that Kubernetes will establish itself as the interface of choice for infrastructure and cloud-native technology. In addition, more standards will be established and more tools will support and integrate with these standards.

In short, my prediction for the future is that Kubernetes will become the standard cloud-native management interface. It isn’t exactly ‘one tool to rule them all,’ but a consensus API that unifies communities. Of course, you can still have freedom to use the tools of your choice, but the unified open source interface guarantees that you won’t get locked in.

With Docker and containers, we created a mindset that workloads are ephemeral. Using the same technologies, we can expand this notion not just to Kubernetes clusters but to our whole developer platform. Or, if you like, the multitude of platforms we’ll offer our users.
