Kong Updates Service Mesh to Replace Load Balancers

Kong today announced the general availability of version 1.4 of Kong Mesh, which the company says could eliminate the need for traditional load balancers in decentralized IT environments running microservices-based applications.

Marco Palladino, CTO of Kong, says the ZeroLB capability will save organizations a substantial portion of the budget they currently allocate to centralized load balancers, now largely an antipattern in decentralized IT environments.

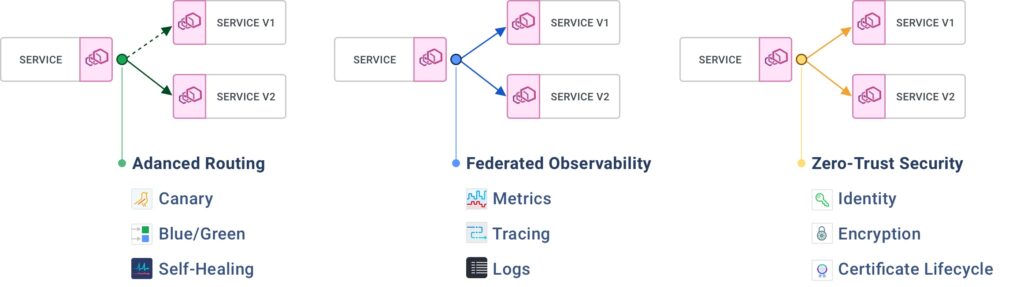

Based on Envoy proxy software and the open source Kuma service mesh, which Kong donated to the Cloud Native Computing Foundation (CNCF), the latest version of Kong Mesh now supports five decentralized load balancing algorithms: round robin, least request, ring hash, maglev and random.
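
To make those algorithm names concrete, here is a minimal Python sketch of how a client-side proxy might choose among endpoints under four of them. It is an illustration only, not Kong Mesh or Envoy code: the class names, the `pick` and `done` methods, and the `replicas` parameter are all hypothetical, and maglev is omitted for brevity. Production proxies may also sample a few endpoints for least request rather than scanning all of them, as this simplified version does.

```python
import hashlib
import itertools
import random
from bisect import bisect_left


class RoundRobin:
    """Hand out endpoints in a fixed rotating order."""

    def __init__(self, endpoints):
        self._cycle = itertools.cycle(list(endpoints))

    def pick(self, key=None):
        return next(self._cycle)


class RandomChoice:
    """Pick any endpoint uniformly at random."""

    def __init__(self, endpoints):
        self._endpoints = list(endpoints)

    def pick(self, key=None):
        return random.choice(self._endpoints)


class LeastRequest:
    """Pick the endpoint with the fewest in-flight requests (full scan)."""

    def __init__(self, endpoints):
        self.active = {endpoint: 0 for endpoint in endpoints}

    def pick(self, key=None):
        endpoint = min(self.active, key=self.active.get)
        self.active[endpoint] += 1  # request is now in flight
        return endpoint

    def done(self, endpoint):
        self.active[endpoint] -= 1  # request finished


class RingHash:
    """Consistent hashing: the same key keeps mapping to the same endpoint."""

    def __init__(self, endpoints, replicas=100):
        # Place several virtual nodes per endpoint on the ring (hypothetical default).
        self._ring = sorted(
            (self._hash(f"{endpoint}#{i}"), endpoint)
            for endpoint in endpoints
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value):
        return int(hashlib.md5(value.encode()).hexdigest(), 16)

    def pick(self, key):
        # First virtual node at or after the key's hash, wrapping around the ring.
        index = bisect_left(self._points, self._hash(key)) % len(self._ring)
        return self._ring[index][1]
```

In a mesh, logic of this kind runs inside each service's sidecar proxy instead of in a shared appliance, which is the basis for the ZeroLB argument that a separate load balancer hop is unnecessary.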

In addition, Kong claims the latest release improves overall network performance by a factor of four compared to a more centralized approach based on traditional load balancers that create extra network hops in decentralized environments. It is also more portable than existing load balancers and provides access to more advanced self-healing capabilities.

While Kong Mesh is designed to be deployed on top of both Kubernetes and traditional virtual machines, it’s mainly decentralized environments with microservices deployed in a Kubernetes environment that will benefit from ZeroLB. In fact, Palladino expects to see other service mesh providers making similar cases to eliminate traditional load balancers in decentralized IT environments.

There’s a lot of debate over which type of service mesh should be employed, but the number of organizations that have deployed one in a production environment remains low. Initially, most organizations will rely on proxy software or an ingress controller to manage a small number of microservices. It’s usually not until an organization has deployed somewhere around 100 microservices and the IT environment becomes more complex that the value of a service mesh becomes apparent.

At the same time, however, interest in reducing costs by eliminating the need to deploy load balancing hardware or software may spur additional demand for service meshes. The challenge is that many IT organizations automatically deploy some type of load balancer simply out of organizational inertia, notes Palladino.

Load balancers, of course, play a critical role in centralized IT environments running monolithic applications, so they won’t be fading away any time soon. Most organizations will wind up running a mix of centralized and decentralized applications for many years to come. However, that doesn’t necessarily mean that platforms and architectures that were designed for legacy IT environments should be carried forward.

The good news is there’s no shortage of service mesh platforms that developers and the IT teams that support them can experiment with. In many cases, IT teams will discover a service mesh presents developers with a layer of abstraction above a tangled web of network protocols that are difficult to navigate. There may even come a day when network operations teams are folded into a larger DevOps team once developers discover they can programmatically invoke any network service by making a simple call through an application programming interface (API) to a service mesh.