5 Key Considerations for Kubernetes in Production

According to a 2020 global survey from the Cloud Native Computing Foundation (CNCF), the use of containers and Kubernetes in production has increased to 92% and 83% of respondents, respectively.

As the use of containers and Kubernetes in production becomes mainstream, enterprises are looking for guardrails to put in place before going live to ensure such environments are reliable and that they follow governance, compliance and security best practices.

To reap the benefits of containers without ending up in chaos, organizations must plan and prepare carefully before going live. As you build more environments and migrate more applications, this process gets easier and eventually becomes a non-event.

Production readiness is a methodical process that takes time and gives predictable structure and consistency to your work. Throughout this process, you want to engage your teams to work together and align the business and technical goals of the platform.

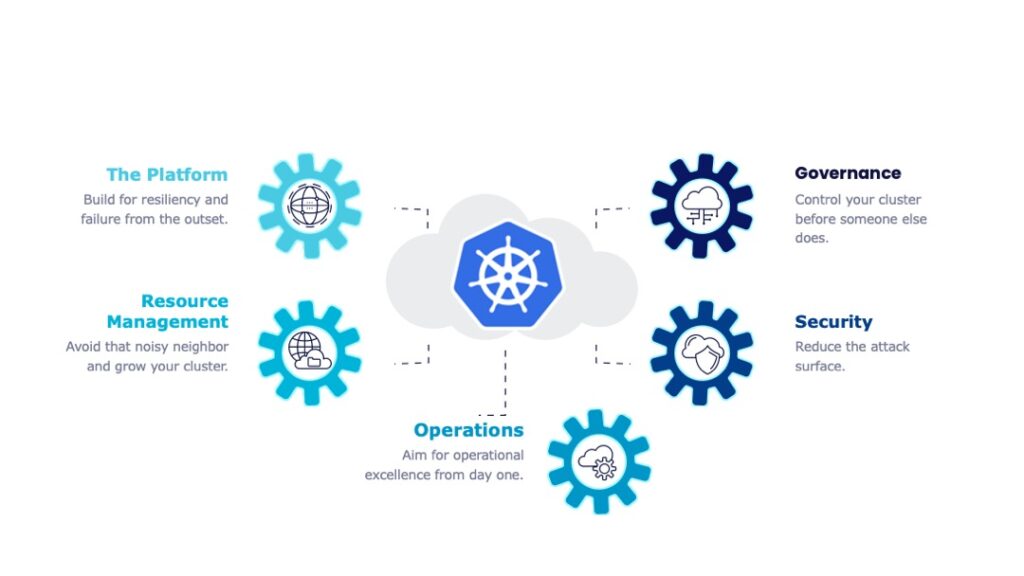

While each organization’s road to production is different, there are five common areas to focus on: the platform itself, resource management, operations, security and governance.

The Platform

Kubernetes is the central component of the platform, but it’s not everything. Integrated components, such as storage and the container runtime, and surrounding systems, such as CI/CD, are also crucial. Taking a product mindset helps you iterate on the platform’s features over time instead of treating it as a one-time effort.

Regardless of whether you’re using a managed service on a public cloud or building your own cluster, the platform should be built for resiliency and to protect against failure from the outset.

One way to improve the resiliency of the platform is to formulate and implement an effective disaster recovery plan (DRP). An effective DRP is paramount for restoring the platform quickly after an outage and for meeting any service level agreements (SLAs) in place for the platform or for mission-critical applications.

Another important thing to consider is sizing the cluster properly. You want to carefully consider the types of workloads anticipated (stateful versus stateless, high-performance versus general-purpose, etc.), the number of containers and average daily requests to the Kubernetes API server. It’s better to start with a small number of worker nodes and scale up or out later.
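As a rough illustration of the sizing arithmetic, the sketch below estimates how many worker nodes a set of workloads needs by comparing total CPU requests, memory requests and pod count against per-node capacity. The `Workload` type, the 25% headroom and the node sizes are assumptions for illustration; 110 is the kubelet’s default maximum number of pods per node. It ignores bin-packing fragmentation, system reservations and API server load, so treat the result as a lower bound to validate against real usage.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload profile: replica count and per-pod resource requests."""
    name: str
    replicas: int
    cpu_millicores: int  # CPU request per pod (1000m = 1 CPU)
    memory_mib: int      # memory request per pod


def estimate_worker_nodes(workloads, node_cpu_millicores, node_memory_mib,
                          max_pods_per_node=110, headroom=0.25):
    """Lower-bound estimate of worker nodes needed to fit all pod requests.

    `headroom` keeps a fraction of each node free for spikes, system
    daemons and rolling updates. Bin-packing fragmentation is ignored.
    """
    usable_cpu = node_cpu_millicores * (1 - headroom)
    usable_mem = node_memory_mib * (1 - headroom)
    total_cpu = sum(w.replicas * w.cpu_millicores for w in workloads)
    total_mem = sum(w.replicas * w.memory_mib for w in workloads)
    total_pods = sum(w.replicas for w in workloads)
    # Whichever dimension runs out first (CPU, memory or pod count) decides.
    return max(
        1,
        math.ceil(total_cpu / usable_cpu),
        math.ceil(total_mem / usable_mem),
        math.ceil(total_pods / max_pods_per_node),
    )


if __name__ == "__main__":
    workloads = [
        Workload("web", replicas=6, cpu_millicores=500, memory_mib=512),
        Workload("worker", replicas=4, cpu_millicores=1000, memory_mib=2048),
    ]
    # 4-CPU / 16 GiB nodes with 25% headroom
    print(estimate_worker_nodes(workloads, node_cpu_millicores=4000, node_memory_mib=16384))
```

With these example numbers the estimate is 3 nodes, with CPU as the binding constraint (7 CPUs requested against 3 usable CPUs per node), which fits the advice to start small and scale out as real usage data comes in.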

Resource Management

Divide and conquer your workloads by placing them in separate namespaces. This has several benefits. First, it makes access control much easier, since you can isolate workloads for different teams, projects or environments and assign permissions accordingly using role-based access control (RBAC). Second, you can allocate resources at the namespace level (for example, with resource quotas) and enforce those allocations later using policies.
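A minimal sketch of the namespace-per-team pattern, written in Python so the manifests can be generated and reviewed programmatically: it builds a Namespace plus an RBAC Role and RoleBinding that give a team’s group edit access to common workload resources only inside that namespace. The `team-<name>` naming scheme, the group name and the exact resources and verbs are assumptions; adjust them to your own access model. kubectl accepts JSON manifests as well as YAML, so the printed output can be piped to `kubectl apply -f -`.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def team_namespace_manifests(team: str, group: str) -> list:
    """Namespace plus a namespaced Role/RoleBinding for one (hypothetical) team."""
    ns = f"team-{team}"
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": ns, "labels": {"team": team}},
    }
    edit_verbs = ["get", "list", "watch", "create", "update", "patch", "delete"]
    role = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",  # a Role (not a ClusterRole) only applies inside its namespace
        "metadata": {"name": "workload-editor", "namespace": ns},
        "rules": [
            # Core API group ("") resources
            {"apiGroups": [""], "resources": ["pods", "services", "configmaps"],
             "verbs": edit_verbs},
            # Deployments live in the "apps" API group
            {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": edit_verbs},
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "workload-editor-binding", "namespace": ns},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": "workload-editor", "apiGroup": RBAC_API},
    }
    return [namespace, role, binding]


if __name__ == "__main__":
    items = team_namespace_manifests("payments", "payments-developers")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```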

Going live with Kubernetes’ default parameters can lead to unpredictable behavior. Set limits on almost everything, including but not limited to the number of requests handled by the API server, the maximum number of pods per worker node and the lower and upper bounds for pods’ CPU and memory usage. By default, there are no limits on individual pods, which means a pod can consume all the resources on the worker node it’s scheduled on.
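The per-pod CPU and memory bounds can be set per namespace with a LimitRange (defaults, minimums and maximums for each container), alongside a ResourceQuota that caps the namespace’s totals. The sketch below generates both; every quantity in it is a placeholder, not a recommendation. API server request limits and the per-node pod cap are configured elsewhere (API Priority and Fairness and the kubelet’s `maxPods` setting, respectively) and are not covered here.

```python
import json


def namespace_guardrails(namespace: str) -> list:
    """ResourceQuota (namespace totals) and LimitRange (per-container bounds).

    All quantities are placeholders; size them from observed usage.
    """
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "pods": "50",
                "requests.cpu": "20",
                "requests.memory": "40Gi",
                "limits.cpu": "40",
                "limits.memory": "80Gi",
            }
        },
    }
    limit_range = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "container-bounds", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied when a container specifies no request/limit
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                    # Lower and upper bounds enforced at admission time
                    "min": {"cpu": "50m", "memory": "64Mi"},
                    "max": {"cpu": "2", "memory": "4Gi"},
                }
            ]
        },
    }
    return [quota, limit_range]


if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": namespace_guardrails("team-payments")}, indent=2))
```

Pairing the two matters: once a ResourceQuota covers `limits.cpu` or `limits.memory`, pods that omit those limits are rejected, and the LimitRange defaults fill them in so such pods are still admitted.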

If you can’t measure it, you can’t improve it. You want to make sure to monitor the performance of the underlying infrastructure of the platform to better understand your usage patterns over time and scale up or out accordingly.

Operations

Using health checks (readiness and liveness probes) in your application manifests, along with affinity rules that spread pods across data centers or availability zones, can increase the overall reliability and availability of your applications.
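As a sketch of what these settings look like in a pod template, the function below adds readiness and liveness probes plus a preferred pod anti-affinity rule keyed on the well-known `topology.kubernetes.io/zone` node label, so replicas of the same app tend to land in different zones. The `/healthz/ready` and `/healthz/live` endpoints, port 8080 and the probe timings are hypothetical; your application must actually serve whatever endpoints you configure.

```python
import json


def web_pod_template(app: str, image: str, port: int = 8080) -> dict:
    """Pod template with probes and zone anti-affinity (paths/timings hypothetical)."""
    return {
        "metadata": {"labels": {"app": app}},
        "spec": {
            "containers": [{
                "name": app,
                "image": image,
                "ports": [{"containerPort": port}],
                # Not ready -> removed from Service endpoints, but not restarted.
                "readinessProbe": {
                    "httpGet": {"path": "/healthz/ready", "port": port},
                    "initialDelaySeconds": 5,
                    "periodSeconds": 10,
                },
                # Repeated liveness failures -> kubelet restarts the container.
                "livenessProbe": {
                    "httpGet": {"path": "/healthz/live", "port": port},
                    "initialDelaySeconds": 15,
                    "periodSeconds": 20,
                    "failureThreshold": 3,
                },
            }],
            "affinity": {
                "podAntiAffinity": {
                    # Prefer not to co-locate replicas of this app in one zone.
                    "preferredDuringSchedulingIgnoredDuringExecution": [{
                        "weight": 100,
                        "podAffinityTerm": {
                            "labelSelector": {"matchLabels": {"app": app}},
                            "topologyKey": "topology.kubernetes.io/zone",
                        },
                    }]
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(web_pod_template("web", "registry.example.com/web:1.0"), indent=2))
```

A *preferred* rule still lets pods schedule when zones run short of capacity; a `requiredDuringSchedulingIgnoredDuringExecution` rule would enforce the spread strictly, at the cost of pods possibly staying Pending.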

It’s advisable to use Deployments instead of standalone Pod manifests. A Deployment keeps the desired number of replicas running, so applications survive node restarts or failures, and it makes future rollouts (and rollbacks) easy to manage.
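A minimal Deployment, built here as a Python dict, shows what the controller manages for you: a replica count, a selector that must match the pod template’s labels, and a rolling-update strategy. The app name, image and the `maxUnavailable: 0` / `maxSurge: 1` choice are assumptions for illustration.

```python
import json


def deployment(app: str, image: str, replicas: int = 3) -> dict:
    """A minimal apps/v1 Deployment; name, image and strategy values are placeholders."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": {"app": app}},
        "spec": {
            # The controller keeps this many pods running and recreates lost ones.
            "replicas": replicas,
            # Must match the pod template's labels below.
            "selector": {"matchLabels": {"app": app}},
            "strategy": {
                "type": "RollingUpdate",
                # Add one new pod at a time and never drop below the desired count.
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": {"app": app}},
                "spec": {"containers": [{"name": app, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment("web", "registry.example.com/web:1.0"), indent=2))
```

Once applied, `kubectl rollout status deployment/<name>` follows a rollout and `kubectl rollout undo deployment/<name>` reverts to the previous revision.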

Ops teams should have an operations playbook that defines the roles and responsibilities of each member of the team. This will help to rapidly triage and resolve issues.

Automation goes hand-in-hand with Kubernetes environments. Failure is almost inevitable in distributed systems, and you want to be prepared to programmatically add, remove or replace nodes in the cluster as quickly as possible and with a minimum of human intervention.
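One small piece of that automation might look like the sketch below: given node health reported by your monitoring (the `NodeStatus` type and the 10-minute grace period are hypothetical), it produces `kubectl cordon` and `kubectl drain` commands for nodes that have been unhealthy too long, so their workloads move elsewhere before the node is replaced. By default it only prints the commands; actually replacing the machine is left to your provisioning tooling (for example, a cloud autoscaling group) and is outside this sketch.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class NodeStatus:
    """Hypothetical health record, e.g. fed from your monitoring system."""
    name: str
    healthy: bool
    minutes_unhealthy: int = 0


def remediation_plan(nodes, grace_minutes=10):
    """kubectl commands that move workloads off nodes unhealthy for too long."""
    plan = []
    for node in nodes:
        if not node.healthy and node.minutes_unhealthy >= grace_minutes:
            # Stop new pods from being scheduled on the node.
            plan.append(["kubectl", "cordon", node.name])
            # Evict running pods so their controllers reschedule them elsewhere.
            plan.append(["kubectl", "drain", node.name, "--ignore-daemonsets",
                         "--delete-emptydir-data", "--timeout=300s"])
    return plan


def run(plan, execute=False):
    """Print each command; only execute when explicitly asked (dry run by default)."""
    for cmd in plan:
        print(" ".join(cmd))
        if execute:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(remediation_plan([
        NodeStatus("node-a", healthy=True),
        NodeStatus("node-b", healthy=False, minutes_unhealthy=15),
    ]))
```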

Effective observability is vital in Kubernetes production environments for monitoring and measuring user experience and application and platform performance, and for responding quickly to incidents.

This can be done using a mix of whitebox and blackbox monitoring, application performance monitoring (APM), centralized logging and tracing.
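Blackbox monitoring checks a service from the outside, the way a user experiences it, without knowledge of its internals. A minimal probe using only the Python standard library might look like this; the URL and the 5-second timeout are assumptions, and in practice such checks run on a schedule and export results to your monitoring system (dedicated tools such as the Prometheus Blackbox Exporter do this).

```python
import time
import urllib.error
import urllib.request


def blackbox_probe(url: str, timeout: float = 5.0) -> dict:
    """Request `url` from the outside and report availability and latency."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with an error status
    except OSError:
        status = None  # connection refused, DNS failure, timeout, etc.
    return {
        "url": url,
        "up": status is not None and 200 <= status < 400,
        "status": status,
        "latency_s": round(time.monotonic() - start, 3),
    }


if __name__ == "__main__":
    # Hypothetical endpoint; run on a schedule and ship results to your monitoring.
    print(blackbox_probe("https://example.com/"))
```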

Security

There are plenty of things involved in securing Kubernetes applications and platforms. Since cloud-native environments are highly dynamic, it’s better to approach their security from several angles, often summarized as the four C’s of cloud-native security: code, container, cluster and cloud.

At the code level, minimize the severe and high-risk vulnerabilities that can enter your codebase through legacy code or open source libraries. Static code analysis and dependency scanning can detect such vulnerabilities early in the development process and keep catching them throughout. For example, many DevOps teams scrambled when the critical Log4j vulnerability (Log4Shell) was disclosed in late 2021; teams that already scanned their dependencies could quickly find every affected library version.

When it comes to containers, reduce the attack surface by keeping images small: the more packages and libraries an image contains, the more likely it is to include vulnerabilities. Also, unless there is a specific need, run containers as a non-root user. This is of paramount importance.
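In Kubernetes, running as non-root is enforced through a container’s `securityContext`. The sketch below builds a restrictive security context and includes a simple check that flags containers missing the key settings. The UID 10001 is an arbitrary placeholder, the image must actually be built to run as that non-root user, and the check only inspects the container-level context (a pod-level `securityContext` can also set these fields).

```python
def hardened_security_context(uid: int = 10001) -> dict:
    """Restrictive container securityContext; the UID is an arbitrary placeholder."""
    return {
        "runAsNonRoot": True,              # kubelet refuses to start the container as UID 0
        "runAsUser": uid,
        "allowPrivilegeEscalation": False,
        "readOnlyRootFilesystem": True,    # mount an emptyDir for paths the app must write
        "capabilities": {"drop": ["ALL"]},
    }


def security_findings(container: dict) -> list:
    """Flag a container spec that may run as root or escalate privileges."""
    sc = container.get("securityContext") or {}
    findings = []
    if not sc.get("runAsNonRoot"):
        findings.append("runAsNonRoot is not set to true")
    if sc.get("runAsUser") == 0:
        findings.append("runAsUser is 0 (root)")
    # Treated as allowed when unset, so it must be disabled explicitly.
    if sc.get("allowPrivilegeEscalation", True):
        findings.append("allowPrivilegeEscalation is not disabled")
    return findings


if __name__ == "__main__":
    print(security_findings({"name": "legacy-app", "image": "legacy:latest"}))
    print(security_findings({"name": "app", "securityContext": hardened_security_context()}))
```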

In the Kubernetes cluster, use RBAC to grant users and applications only the minimum privileges they need, so that neither gains access it shouldn’t have. Also, restrict traffic between namespaces and pods, since by default every pod in a cluster can communicate with every other pod.
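Because pod-to-pod traffic is open by default, a common starting point is a default-deny ingress NetworkPolicy in each namespace, plus explicit allow rules. The sketch below generates a deny-all-ingress policy and one that admits traffic only from pods in the same namespace; the policy and namespace names are placeholders. NetworkPolicies are only enforced if the cluster’s network (CNI) plugin supports them, and egress is not covered here.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # Empty podSelector = all pods; listing Ingress with no rules denies it.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_same_namespace(namespace: str) -> dict:
    """Allow ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches pods in this namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    ns = "team-payments"
    items = [default_deny_ingress(ns), allow_same_namespace(ns)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

NetworkPolicies are additive: a pod’s allowed traffic is the union of all policies that select it, so the allow rule opens exactly what the deny-all policy closed for same-namespace traffic.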

Your application, platform and code probably reside in a private, public or hybrid cloud. It’s very important to secure that layer by following your cloud provider’s security best practices, applying the principle of least privilege and continuously monitoring for suspicious activity.

Governance

Some industries, such as health care and financial services, operate under specific regulations and must have certain policies in place, enforced and verified in their Kubernetes environments. For example, all data may need to be encrypted both in transit and at rest.
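For the at-rest part, Kubernetes can encrypt Secrets stored in etcd using an EncryptionConfiguration file passed to the API server with `--encryption-provider-config`. The sketch below generates one using the `secretbox` provider with a random 32-byte key, and `identity` as a fallback so data written before encryption was enabled can still be read. The key name and output file name are placeholders; on managed services this is usually configured through the provider, and a KMS provider is generally preferred over a locally stored key. Encryption in transit (TLS) is a separate concern.

```python
import base64
import json
import os


def encryption_config(key_name: str = "key1") -> dict:
    """EncryptionConfiguration that encrypts Secrets at rest with secretbox."""
    secret = base64.b64encode(os.urandom(32)).decode("ascii")  # 32-byte key, base64
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [{
            "resources": ["secrets"],
            "providers": [
                # The first provider encrypts new writes.
                {"secretbox": {"keys": [{"name": key_name, "secret": secret}]}},
                # Fallback for reading data stored before encryption was enabled.
                {"identity": {}},
            ],
        }],
    }


if __name__ == "__main__":
    # The file contains the encryption key, so create it readable by the owner only.
    fd = os.open("encryption-config.json", os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as fh:
        json.dump(encryption_config(), fh, indent=2)
```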

This can be accomplished by building, buying or integrating tools in a governance framework to ensure nothing is slipping through the cracks and the organization is not violating compliance agreements as things change over time.

Open Policy Agent (OPA) provides a centralized, general-purpose policy engine to create, manage and operate policies and is backed by a large open source community. It can run inside the Kubernetes cluster (for example, through the OPA Gatekeeper project) or as a separate service, and it plugs into Kubernetes as a validating admission controller that checks API requests before they are accepted.

A wide variety of policies can be written in a policy language called Rego and then used in OPA. For instance, certain image management policies can be written to ensure only images with low- or medium-risk vulnerabilities can go to production.
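OPA policies are normally written in Rego; to keep to one language here, the Python sketch below only mirrors the decision such an image policy makes and the `admission.k8s.io/v1` AdmissionReview response a validating admission webhook returns. It is not OPA’s API. The `scan_results` mapping from image to worst vulnerability severity is a hypothetical input (for example, populated from your scanner’s reports); images with no scan result or an unrecognized severity are denied, so the policy fails closed.

```python
import json

SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def review_pod(admission_review: dict, scan_results: dict, max_allowed: str = "MEDIUM") -> dict:
    """Allow a Pod only if every container image's worst finding is <= max_allowed.

    `scan_results` (image -> worst severity) is a hypothetical input.
    Init containers are omitted for brevity.
    """
    request = admission_review["request"]
    limit = SEVERITY_RANK[max_allowed]
    problems = []
    for container in request["object"]["spec"].get("containers", []):
        image = container["image"]
        worst = scan_results.get(image)
        if worst is None:
            problems.append(f"{image}: no scan result")  # fail closed
        elif SEVERITY_RANK.get(worst, SEVERITY_RANK["CRITICAL"]) > limit:
            problems.append(f"{image}: worst severity {worst}")
    response = {"uid": request["uid"], "allowed": not problems}
    if problems:
        response["status"] = {"code": 403, "message": "; ".join(problems)}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}


if __name__ == "__main__":
    review = {"request": {"uid": "example-uid", "object": {"spec": {"containers": [
        {"name": "web", "image": "registry.example.com/web:1.0"}]}}}}
    print(json.dumps(review_pod(review, {"registry.example.com/web:1.0": "HIGH"}), indent=2))
```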

Other open source tools can also be integrated into your CI/CD pipeline: Polaris checks that workload configurations follow best practices, and Trivy scans images for vulnerabilities.
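As an example of the CI integration, the sketch below reads a Trivy JSON report (for example, one produced with `trivy image --format json --output report.json <image>`) and fails the pipeline step if any HIGH or CRITICAL vulnerabilities are present. It assumes Trivy’s JSON layout of `Results[].Vulnerabilities[]` with `Target`, `VulnerabilityID` and `Severity` fields; check that against the Trivy version you run. For a plain severity gate, Trivy’s own `--severity` and `--exit-code` flags do the same without a script; a wrapper like this helps when you need custom rules such as allowlists.

```python
import json
import sys

BLOCKING = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict, blocking=BLOCKING) -> list:
    """List findings in a Trivy JSON report whose severity should fail the build."""
    findings = []
    for result in report.get("Results") or []:
        # "Vulnerabilities" may be missing or null for clean targets.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append(
                    f"{result.get('Target')}: {vuln.get('VulnerabilityID')} ({vuln['Severity']})")
    return findings


def main(path: str) -> int:
    with open(path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for line in findings:
        print(line)
    return 1 if findings else 0  # non-zero exit code fails the CI step


# Usage: python trivy_gate.py report.json
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```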

This is not an exhaustive checklist, but rather a reminder and an opportunity to take the time and space to prepare to go live. While checklists and automation can save you time, money and effort in your preparations, the purpose of this exercise is to work with your teams to find out what’s important to you, your end-users and your organization.

For example, a team in a financial services organization might consider performance testing a key area to focus on since they work with high-frequency trading apps and even a slight change in latency can make a big difference.

The idea here is to learn as much as you can throughout this exercise, document your learnings and repeat in the future to better build and operate Kubernetes clusters for production environments.