Red Hat Platform Brings Kafka Closer to Kubernetes

Red Hat this week updated its Red Hat Integration platform for event-driven applications deployed on Kubernetes clusters by incorporating functionality from multiple open source projects to address everything from governance to how data is captured.

At the core of the Red Hat Integration platform is an instance of Apache Kafka, open source stream-processing software that handles data in real time. The Red Hat implementation of Kafka is based on Strimzi, which Red Hat continues to optimize for Kubernetes clusters. Red Hat is now extending that capability by adding support for Debezium, open source software that captures changes to data in real time as they occur.
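To make the idea of change data capture concrete, here is a minimal Python sketch of how a consumer might apply a stream of change events to keep its own copy of a table current. It assumes a simplified form of the Debezium event envelope, with an `op` code (`c` for create, `u` for update, `d` for delete, `r` for a snapshot read) and `before`/`after` row images. The `apply_change` helper, the `id` key and the sample rows are hypothetical, and the events are plain dictionaries rather than messages read from Kafka.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(table: Dict[int, Row], event: Dict[str, Any]) -> None:
    """Apply one change event to an in-memory copy of a table keyed by 'id'."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # Creates, snapshot reads and updates all carry the new row in 'after'.
        row = event["after"]
        table[row["id"]] = row
    elif op == "d":
        # Deletes carry the removed row in 'before'.
        table.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


# Hypothetical events, in the order a consumer would read them from a topic.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]

replica: Dict[int, Row] = {}
for event in events:
    apply_change(replica, event)

print(replica)
```

Because each event describes a single row-level change, replaying the events in order is enough for a downstream service to track the source data without querying it directly.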

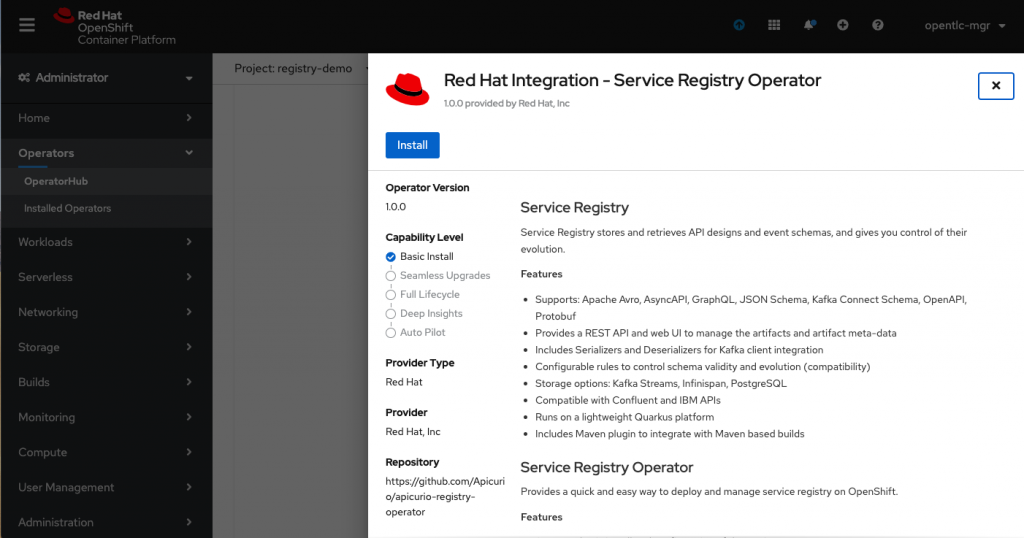

In addition, Red Hat is adding support for a Service Registry, based on the open source Apicurio project, that governs data movement within a Kubernetes environment. The contracts the Service Registry creates provide visibility into the types of messages flowing through the system and help prevent runtime data errors.
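As a rough illustration of how a message contract can catch data errors before they reach a consumer, the Python sketch below checks a message against a set of expected fields and types. It is not the Apicurio API: the `ORDER_CONTRACT` mapping and the `violations` helper are hypothetical, and a real registry would typically store schemas in a format such as Avro or JSON Schema rather than Python types.

```python
from typing import Any, Dict, List

# Hypothetical contract: field name -> expected Python type.
ORDER_CONTRACT: Dict[str, type] = {
    "order_id": int,
    "amount": float,
    "currency": str,
}


def violations(message: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return a list of ways the message breaks the contract (empty if none)."""
    problems: List[str] = []
    for field, expected in contract.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected):
            actual = type(message[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


good = {"order_id": 7, "amount": 19.99, "currency": "EUR"}
bad = {"order_id": "7", "amount": 19.99}

print(violations(good, ORDER_CONTRACT))
print(violations(bad, ORDER_CONTRACT))
```

A producer that runs a check like this before publishing can reject a malformed message at the source, rather than leaving every consumer to discover the problem at runtime.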

Red Hat is also adding support for the latest release of MirrorMaker to its Strimzi implementation of Kafka to make it possible to replicate data streams. MirrorMaker 2.0 adds support for bidirectional replication, topic configuration synchronization and offset mapping.

Finally, Red Hat is adding a technical preview of Apache Camel, open source software that lets developers express integration logic in a simplified declarative syntax that can be deployed natively on Kubernetes. It also provides connectivity options to serverless computing frameworks.

Sameer Parulkar, product marketing director for integration products at Red Hat, says that while event-driven applications are not a new concept, they are starting to become more widely embraced as organizations look to automate processes. Microservices-based applications running on Kubernetes clusters are leveraging Kafka software to capture data in real time, which is then employed to automate business processes in near real time.

IT teams are not necessarily setting out to build event-driven applications, but when it becomes apparent that data needs to be processed and analyzed in real-time, it’s not long before IT teams embrace engines such as Kafka. Running Kafka on Kubernetes makes it easier to scale the consumption of IT infrastructure resources up and down as needed.

The rise of event-driven applications doesn’t necessarily mean legacy batch-mode applications are going away anytime soon. However, it does mean application environments are becoming more varied and complex, says Parulkar.

Red Hat is trying to reduce that complexity by providing an integration platform based on open source software it curates. In theory, IT teams could build their own integration platforms using open source software. However, Red Hat is betting most organizations will prefer to consume those projects via one integration platform.

It may be a while before event-driven applications are deployed more pervasively across the enterprise, because building, deploying and maintaining them requires a fair amount of expertise. Nevertheless, it’s clear that after several decades of being around in one form or another, the time for event-driven applications in mainstream IT environments has finally arrived.