Buoyant Adds Proprietary Enterprise Edition of Linkerd Service Mesh

Buoyant today revealed it is making available a curated enterprise edition of the open source Linkerd service mesh.

Buoyant CEO William Morgan said that in addition to ongoing support for hardened images, including any vulnerability patches that might be required, the proprietary Linkerd Enterprise offering provides capabilities that are not available in other editions of Linkerd.

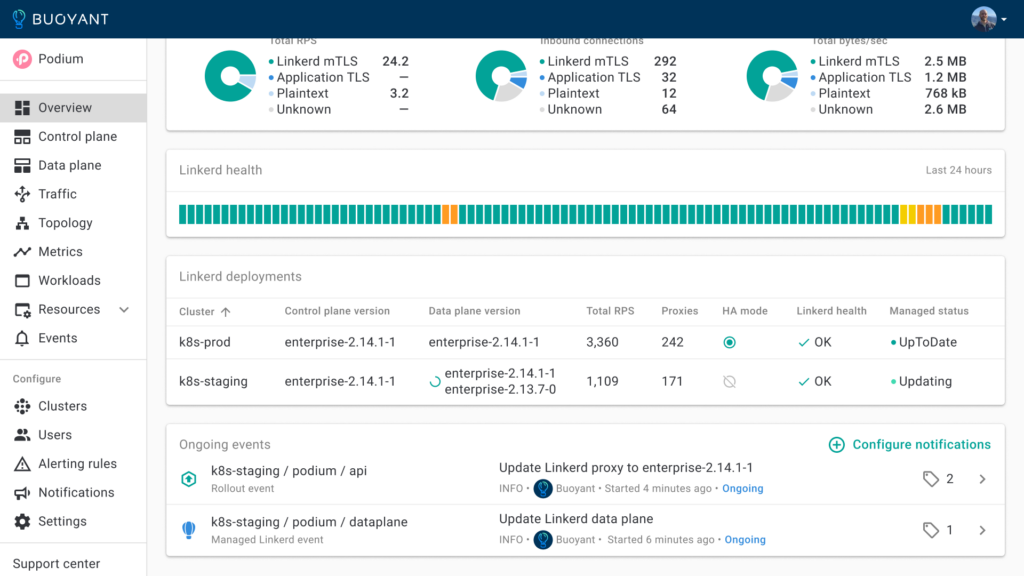

Those capabilities include tools to ensure identity-based zero-trust policies are implemented and maintained at scale, a load balancer to optimize consumption of underlying infrastructure resources, life cycle automation tools to simplify deployments and rollbacks, and compliance with the FIPS 140-2 cryptography standard.

The overall goal is to increase the level of reliability that can be achieved when deploying Linkerd in an enterprise IT environment, said Morgan.

Buoyant, meanwhile, remains committed to the open source edition of Linkerd, which is being advanced under the auspices of the Cloud Native Computing Foundation (CNCF) alongside multiple other open source service meshes. But some enterprise customers made it clear they wanted a curated edition of the platform supported by Buoyant, said Morgan. There are no plans to change the licensing terms under which the open source edition of Linkerd is offered, he added.

For several years now, Buoyant has been making a case for a lighter-weight service mesh for managing application programming interfaces (APIs) on Kubernetes clusters, one that is simpler to implement than rival approaches. That approach substantially reduces the cognitive overhead that would otherwise be required to deploy and maintain a service mesh, noted Morgan.

It’s not clear how quickly enterprise IT organizations are embracing service meshes, but as more cloud-native applications based on microservices are deployed, each with its own set of APIs, the need for a service mesh becomes more apparent. It’s not uncommon for IT teams today to find they need to manage hundreds, perhaps even thousands, of APIs.

In addition to making it simpler to manage APIs at scale, a service mesh also provides IT teams with an abstraction layer that simplifies networking and security management across multiple Kubernetes clusters. That capability provides the added benefit of making it easier to converge network, security and DevOps workflows.

The question many enterprise IT teams will need to answer is whether the service mesh will be managed by a DevOps team alongside the rest of a cloud-native application environment or whether a networking team will assume responsibility for managing it alongside other connectivity platforms. Regardless of approach, a service mesh creates an opportunity to better integrate roles and responsibilities within an enterprise IT environment.

Many organizations may even find, over time, that they have multiple service meshes in place, implemented by different teams. In other cases, organizations may decide an API gateway meets their application connectivity requirements for the immediate future. One way or another, however, the need for a service mesh will become more pronounced as more cloud-native applications are deployed and managing connectivity within and across Kubernetes clusters becomes more challenging.