Securing AI Agents With Docker MCP and cagent: Building Trust in Cloud-Native Workflows

Artificial intelligence (AI) is beginning to appear everywhere in software development. From writing tests to scanning images for vulnerabilities, AI agents are becoming silent coworkers. They can pull data, analyze logs and even trigger builds without human help.

But letting an AI agent touch production systems raises an old question with a new twist:

How do we trust what it does?

Docker’s Model Context Protocol (MCP) tooling and its cagent toolkit offer one possible answer. They let developers build and run AI agents inside containers, with clearly defined permissions and isolation.

This article shows how these tools fit into a cloud-native workflow and which security practices help developers use them responsibly.

Why AI Agents Need Guardrails

AI agents act on behalf of users. They can talk to APIs, analyze data or modify files. If they’re misconfigured, a simple prompt like ‘clean up unused images’ might accidentally delete something critical.

Traditional APIs and scripts already face these risks, but AI lets mistakes scale faster. Agents can act autonomously and chain actions across systems. Without boundaries, one error or malicious instruction can ripple across an entire cluster.

The goal of Docker MCP and cagent is to bring structure and safety to how these agents operate. They let you define exactly what an agent can do and where it can do it — all using familiar container tools.

Understanding Docker MCP

MCP is an open standard that defines how AI models interact with tools and data sources.

Instead of giving an agent direct access to your Docker host or APIs, you expose those capabilities through small, isolated MCP servers. Each MCP server runs in a container and handles a narrow task, for example, listing container images, checking policy compliance or fetching logs.

When an AI agent intends to act, it sends a structured request to the MCP server. The server decides whether the request is allowed and sends the result back. The agent never touches the system directly.

Think of MCP as a firewall for AI agents. It mediates their actions and reduces the ‘blast radius’ if something goes wrong.

Example:

# Run a simple MCP server that exposes Docker Hub data
docker run --rm -p 4000:4000 ghcr.io/docker/mcp-dockerhub:latest

The agent can now safely query container metadata through this MCP server instead of hitting the Docker Hub API directly.
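
The mediation step described above can be sketched in a few lines of Python. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method; everything else here is an assumption made for illustration. The `ALLOWED_TOOLS` names, the `handle_request` function and the simplified result shape are hypothetical and are not taken from any Docker image.

```python
import json

# Hypothetical allowlist: the only tools this server agrees to run.
ALLOWED_TOOLS = {"list_images", "get_image_tags"}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(raw: str) -> dict:
    """Decide whether a JSON-RPC 'tools/call' request may proceed."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    if not isinstance(request, dict):
        return _error(None, -32600, "Invalid request")

    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _error(request_id, -32601, "Method not found")

    params = request.get("params")
    tool = params.get("name") if isinstance(params, dict) else None
    if not isinstance(tool, str) or tool not in ALLOWED_TOOLS:
        return _error(request_id, -32602, f"Tool not permitted: {tool}")

    # A real server would run the tool here; this sketch only reports
    # the decision (assumed, simplified result shape).
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"allowed": True, "tool": tool}}
```

The point of the design is that the agent can only name a tool; whether that tool runs is decided by code the agent cannot modify.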

Building Agents With cagent

cagent is a small open-source tool that makes it easy to build and run AI agents that use MCP servers.

You describe your agent in a YAML file, specifying the model it should use, the MCP tools it can access and the tasks it is expected to perform. Then you run it like any other container.

Example agent.yaml:

agent:
  name: image-linter
  model: local:llama3
  tools:
    - name: dockerhub-mcp
      endpoint: http://localhost:4000
  goals:
    - "Find outdated container images and suggest updates"

To run the agent:

docker run -v "$(pwd)/agent.yaml:/agent.yaml" ghcr.io/docker/cagent:latest

Behind the scenes:

- cagent spins up a local model (via Model Runner) or connects to a remote one

- The agent sends tasks to defined MCP endpoints

- Each endpoint runs in a sandboxed container

The result: fully reproducible, isolated agent workflows.
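
Because the agent definition is declarative, it can be checked before anything runs. The following is a minimal sketch of such a pre-flight check, assuming the file has already been parsed into a dictionary with the same fields as the example agent.yaml above. The `check_agent_config` function and the `TRUSTED_HOSTS` policy are hypothetical and are not part of cagent.

```python
from urllib.parse import urlparse

# Assumed policy: only MCP servers on the local machine are trusted.
TRUSTED_HOSTS = {"localhost", "127.0.0.1"}


def check_agent_config(config: dict) -> list[str]:
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    agent = config.get("agent") or {}
    if not agent.get("name"):
        problems.append("agent.name is missing")
    if not agent.get("model"):
        problems.append("agent.model is missing")

    tools = agent.get("tools") or []
    if not tools:
        problems.append("agent.tools is empty")
    for tool in tools:
        name = tool.get("name", "<unnamed>")
        host = urlparse(tool.get("endpoint", "")).hostname
        if host not in TRUSTED_HOSTS:
            problems.append(f"tool {name}: endpoint host {host!r} is not trusted")
    return problems


# The example definition from this article, as a parsed dictionary.
config = {
    "agent": {
        "name": "image-linter",
        "model": "local:llama3",
        "tools": [{"name": "dockerhub-mcp", "endpoint": "http://localhost:4000"}],
        "goals": ["Find outdated container images and suggest updates"],
    }
}
print(check_agent_config(config))  # prints []
```

A check like this fits naturally into a CI job, so that a definition pointing at an unexpected endpoint fails review before an agent ever starts.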

Why This Matters for Security

AI agents amplify both productivity and risk. By treating each tool or API as an MCP server, we reintroduce an important security principle: least privilege.

Instead of giving an AI model root access, we allow only the smallest set of capabilities it needs to function.

Without MCP, an over-eager agent might:

- Delete production images during ‘cleanup’

- Leak environment secrets while reading logs

- Execute arbitrary commands through injected prompts

With MCP:

- All requests must go through a known endpoint

- Each endpoint runs in its own container with defined permissions

- Logs and responses are traceable and auditable

In short, MCP makes agent behavior observable and bounded — a rare thing in today’s AI landscape.
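
To make "traceable and auditable" concrete, here is a minimal sketch of an audit record written for every MCP request. The field names and the `audit_record` and `verify_chain` helpers are hypothetical. Chaining each entry to the hash of the previous one is an optional technique added here for tamper evidence, not something MCP or cagent prescribes.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(agent: str, endpoint: str, request: dict,
                 response: dict, prev_hash: str = "") -> dict:
    """Build one audit entry whose hash also covers the previous entry's hash."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "endpoint": endpoint,
        "request": request,
        "response": response,
        "prev_hash": prev_hash,
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    return entry


def verify_chain(entries: list[dict]) -> bool:
    """Recompute every hash and confirm each entry links to the one before it."""
    prev = ""
    for entry in entries:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        expected = hashlib.sha256(payload).hexdigest()
        if entry.get("prev_hash") != prev or entry.get("hash") != expected:
            return False
        prev = entry["hash"]
    return True
```

Writing each entry as one JSON object per line keeps the log easy to ship to whatever log store a team already uses, and `verify_chain` lets a reviewer detect edited or missing entries.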

Common Security Pitfalls and How to Prevent Them

Even with isolation, AI workflows may give rise to novel attack paths. The following are some of the pitfalls and ways to avoid them:

| Risk | Example | Mitigation |
| --- | --- | --- |
| Tool poisoning | Using unverified MCP images from public sources | Always use signed or verified images; prefer minimal base images |
| Prompt injection | Agent tricked into running harmful commands | Sanitize input; use static command templates rather than raw string substitution |
| Over-permissioned containers | MCP server runs with --privileged or mounts /var/run/docker.sock | Avoid privileged mode; never expose host Docker socket |
| Supply chain drift | MCP server image updated silently | Pin image digests (docker pull ghcr.io/…@sha256:abc123) |
| Unlogged actions | Agent performs steps without audit | Log every MCP request; store results for review |

Security in AI workflows does not come from a single setting; it comes from layered controls.
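
The prompt-injection mitigation in the table, static command templates instead of raw string substitution, can be sketched as follows. The template names, the `build_command` helper and the deliberately conservative `IMAGE_REF` pattern are assumptions for illustration; the pattern rejects some valid references, such as those with a registry port.

```python
import re

# Hypothetical templates: the agent picks a key, never the command text.
COMMAND_TEMPLATES = {
    "list_tags": ["docker", "image", "ls", "--filter", "reference={image}"],
    "inspect": ["docker", "image", "inspect", "{image}"],
}

# Conservative image reference: lowercase name plus an optional tag.
# The first character must be alphanumeric, so a value can never be
# read as a command-line option.
IMAGE_REF = re.compile(
    r"[a-z0-9][a-z0-9._/-]{0,127}(:[A-Za-z0-9_][A-Za-z0-9._-]{0,127})?"
)


def build_command(action: str, image: str) -> list[str]:
    """Fill a fixed template with a validated image reference."""
    if action not in COMMAND_TEMPLATES:
        raise ValueError(f"unknown action: {action}")
    if not IMAGE_REF.fullmatch(image):
        raise ValueError(f"rejected image reference: {image!r}")
    return [part.format(image=image) for part in COMMAND_TEMPLATES[action]]


print(build_command("inspect", "nginx:1.27"))
# prints ['docker', 'image', 'inspect', 'nginx:1.27']
```

The result is an argument list intended for execution without a shell, so characters such as `;` or `|` would not be interpreted even if validation were loosened. An input like `nginx; rm -rf /` is rejected outright.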

Isolating MCP Servers for Safer Execution

Isolation is the heart of Docker’s security model. You can apply the same principle to MCP servers.

Example:

docker run \
  --read-only \
  --network none \
  --cap-drop ALL \
  ghcr.io/docker/mcp-dockerhub:latest

This command:

- Mounts the container’s root filesystem read-only (no file writes)

- Removes all Linux capabilities

- Removes all network access, inbound and outbound (only the loopback interface remains)

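It’s a clean, simple sandbox. If an agent tries to do more than it should, it can’t. Note that --network none also blocks inbound connections, so a server started this way can only be reached over standard input/output (a transport MCP supports, which requires adding -i), and a server that must call an external API such as Docker Hub needs a restricted network rather than none at all. For production use, combine this with: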

- Signed container verification (Cosign, Notary v2)

- Secrets injection via Vault or Google Cloud Platform (GCP) Secret Manager

- Runtime monitoring with Falco and policy enforcement with Open Policy Agent (OPA)
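
Isolation settings are easy to forget, so it can help to check them automatically. The sketch below audits a `docker run` argument list for the flags discussed in this section and for digest pinning. The `audit_docker_run` and `has_option` helpers are hypothetical, and the check assumes the image reference is the last argument, which is a simplification.

```python
import re

# An image reference pinned by digest ends in @sha256:<64 hex characters>.
DIGEST_REF = re.compile(r".+@sha256:[0-9a-f]{64}")


def has_option(argv: list[str], name: str, value: str) -> bool:
    """True if argv contains `name value` or `name=value`."""
    if f"{name}={value}" in argv:
        return True
    return any(a == name and b == value for a, b in zip(argv, argv[1:]))


def audit_docker_run(argv: list[str]) -> list[str]:
    """Flag risky or missing isolation settings in a `docker run` command."""
    findings = []
    if "--privileged" in argv:
        findings.append("runs with --privileged")
    if any("/var/run/docker.sock" in arg for arg in argv):
        findings.append("mounts the host Docker socket")
    if "--read-only" not in argv:
        findings.append("root filesystem is writable")
    if not has_option(argv, "--cap-drop", "ALL"):
        findings.append("Linux capabilities are not dropped")
    # Simplification: treat the last argument as the image reference.
    if not argv or not DIGEST_REF.fullmatch(argv[-1]):
        findings.append("image is not pinned by digest")
    return findings


hardened = ["docker", "run", "--read-only", "--network", "none",
            "--cap-drop", "ALL", "ghcr.io/docker/mcp-dockerhub:latest"]
print(audit_docker_run(hardened))
# prints ['image is not pinned by digest']
```

Run against the hardened command above, the only finding is the `:latest` tag, which ties back to the supply chain drift risk: a tag can be repointed at a new image, while a digest cannot.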

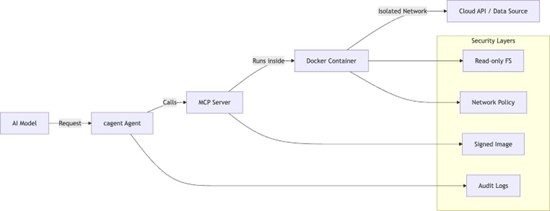

Visualizing the Secure Agent Workflow

Figure 1. Secure AI Agent Workflow with Docker MCP and cagent:

The diagram shows how an AI model communicates through a cagent-based agent, which calls an MCP server running inside an isolated Docker container. Each layer adds signing, network and auditing controls to ensure safe execution.

Practical Use Cases

Practitioners can start using MCP and cagent for real-world tasks today:

- Policy Checking: Run an MCP server that enforces Kubernetes or Terraform policies before deployment

- Image Hygiene: Use agents to scan registries for outdated or vulnerable base images

- DevSecOps Chatbot: Build an internal chatbot that fetches logs, scans configs and reports issues without needing full cluster access

- Offline AI Workflows: Use Docker Model Runner to execute local inference securely without exposing sensitive data to external APIs

Each of these use cases reuses the same secure pattern: Agent → MCP server → isolated container → controlled output.

Lessons From DevOps: AgentOps Needs Guardrails

Over the years, DevOps teams have learned that automation without guardrails leads to chaos. To address the risk, tools such as continuous integration/continuous delivery (CI/CD) pipelines, role-based access control and immutable builds were created.

Now, as we move into the AgentOps era — where AI drives parts of development and operations — those lessons apply again.

Containers and MCP servers give us a way to enforce discipline:

- Define what an agent can do

- Contain how it runs

- Observe every action

These are not abstract concepts; they are the same principles that drive Kubernetes, CI/CD and DevSecOps.

Looking Ahead: A Secure Future for AI Agents

AI agents won’t replace developers, but they will change how we build, test and operate software. Docker’s MCP tooling and cagent show that it’s possible to combine automation and accountability in one framework.

The best part? Everything runs inside containers you already know how to secure.

If we treat each AI agent like a microservice — versioned, monitored and sandboxed — we can scale safely. The next generation of secure, cloud-native systems will not only execute containers; it will trust containers to keep their AI coworkers honest.

Key Takeaways

- Use Docker MCP to give AI agents limited, auditable access to tools

- Define agents declaratively with cagent

- Apply container isolation, signing and network limits to MCP servers

- Treat agents as part of your CI/CD security boundary

- Always log, monitor and review AI actions — just like any other code change