Automating Kubernetes Cleanup in CI Workflows

Continuous integration (CI) practices are now mainstream and have significantly increased deployment frequency while decreasing defect rates for organizations worldwide. This improves mean time to repair (MTTR), market responsiveness, software quality and the developer experience, while reducing risk. Traditionally, testing relied on general-purpose VMs, leading to complex workflows and resource management headaches. As CI adoption increases and Kubernetes becomes the standard for container orchestration, enterprises are using Kubernetes to automate CI pipelines. However, this approach brings clear challenges around cost, resource allocation, security and maintenance. This article explores these hurdles and explains how to overcome them to build a robust pipeline.

The Difficulties of Persistent Kubernetes Environments

Kubernetes offers a declarative interface, programmable API and self-healing features, making it invaluable for CI/CD. Many organizations default to persistent Kubernetes environments for their CI/CD pipelines due to their simplicity. However, pre-installed toolchains and always-on clusters introduce challenges that can undermine Kubernetes’ benefits:

Security Risks: Long-running environments make access control complex, leading to excessive privileges and increased risk. Not all pipelines need the same access, but permissive controls can let less privileged teams run vulnerable images, threatening the entire cluster. Granting developers near-admin access for debugging further complicates security, especially in shared infrastructures.

Cost Overheads: Resources often remain allocated during downtime, wasting compute, memory and bandwidth. Idle clusters and unattached volumes drive up costs, frustrating FinOps as pipelines scale.

Maintenance Burden: When multiple teams share a cluster, configuration drift becomes more likely. Drift breaks pipelines and leaves dangling resources that disrupt other teams, so regular cleanup is required and maintenance overhead grows.

CRD Challenges: Custom resource definitions (CRDs) extend Kubernetes but complicate pipeline segregation since CRDs are cluster-wide. Testing conflicting CRDs requires additional clusters, adding operational overhead, while deleting old ones can disrupt other teams.
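
The regular cleanup that persistent clusters require can be sketched as a small sweep that flags leftovers. This is a minimal illustration, not a production tool: the `CiResource` record, the `find_stale` helper and the idea of tagging each resource with a pipeline ID are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class CiResource:
    """Hypothetical record for something a pipeline left in the cluster."""
    name: str
    pipeline_id: str
    created_at: datetime


def find_stale(resources, active_pipelines, max_age, now=None):
    """Return resources whose pipeline is no longer active and that are older than max_age."""
    now = now or datetime.now(timezone.utc)
    return [
        r for r in resources
        if r.pipeline_id not in active_pipelines and now - r.created_at > max_age
    ]
```

A scheduled job could feed this function the namespaces or volumes it finds and delete whatever comes back. Ephemeral environments, discussed next, aim to make such a sweep unnecessary.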

Ephemeral Environments as a Solution

Ephemeral environments are short-lived: they spin up with your pipeline and are deleted when it finishes. Because nothing outlives the pipeline that created it, this approach helps teams reduce resource waste and improve security.

The simplest way to create ephemeral environments is to spin up a new cluster for each pipeline. However, provisioning clusters and installing operators can be time-consuming. Virtual clusters with auto-cleanup features address these challenges.

Virtual Clusters for Ephemeral Environments

Open-source tools such as vCluster let you create virtual Kubernetes clusters within a host cluster, providing an isolated environment for each pipeline in seconds without provisioning a physical cluster every time. This means faster provisioning, lower costs and automatic resource cleanup.

Auto Cleanup for CI

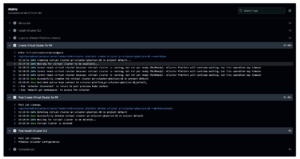

As an example, if you use GitHub Actions and vCluster for your CI infrastructure, you can enable the auto-cleanup parameter by setting ‘auto-cleanup: true’ to automatically remove unused clusters.

During the pipeline lifecycle, you will see cluster creation, task execution and cluster deletion. This reduces costs because the host cluster can use the cluster autoscaler to scale the infrastructure down when no virtual clusters or pipelines are running.
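
Outside GitHub Actions, the same create, run, delete lifecycle can be approximated with a small wrapper. The sketch below is illustrative only: the `ephemeral_vcluster` helper is hypothetical, and it assumes the `vcluster` CLI is installed and that `create`, `delete`, `--namespace` and `--connect=false` behave as they do in recent releases, so check the flags against your installed version.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def ephemeral_vcluster(name, namespace, run=subprocess.run):
    """Create a virtual cluster, yield its name, and delete it afterwards."""
    # Assumption: --connect=false leaves the current kubeconfig context unchanged.
    run(["vcluster", "create", name, "--namespace", namespace, "--connect=false"], check=True)
    try:
        yield name
    finally:
        # Runs even when the wrapped pipeline step raises, so no cluster is left behind.
        run(["vcluster", "delete", name, "--namespace", namespace], check=True)
```

A pipeline step would wrap its work in `with ephemeral_vcluster("ci-1234", "ci"):`. The `run` parameter exists so the command runner can be swapped for a stub in tests.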

Kubernetes Custom Resources

Another advantage of virtual clusters is the ability to sync resources between the host and virtual environments. You can quickly import objects such as Istio or Secret Operators from the host, skipping lengthy installations and saving time.

For example, if you want to use ingress in your pipeline, you don’t need to create all the ingress controllers, resources and classes; you can just sync them from the host alongside the following objects:

- CustomResources

- Nodes

- IngressClasses

- StorageClasses

- CSINodes

- CSIDrivers

- CSIStorageCapacities

- ConfigMaps

- Secrets

- Events

Syncing can also work in reverse, letting you push resources from your virtual cluster back to the host cluster using the toHost option. Integrations for tools such as Istio and cert-manager work seamlessly across both environments, and patches let you control which fields to edit, exclude or override during syncing.
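
To make the two sync directions concrete, here is a rough sketch that builds a sync configuration as a nested dictionary. The `build_sync_config` helper is hypothetical, and the key names (`sync`, `fromHost`, `toHost`, `ingressClasses`, `storageClasses`, `ingresses`, `enabled`) reflect my understanding of the vCluster configuration schema; they should be verified against the documentation for the version you run.

```python
import json


def build_sync_config(from_host=(), to_host=()):
    """Build a nested dict enabling the given resource kinds in each sync direction."""
    return {
        "sync": {
            "fromHost": {kind: {"enabled": True} for kind in from_host},
            "toHost": {kind: {"enabled": True} for kind in to_host},
        }
    }


config = build_sync_config(
    from_host=["ingressClasses", "storageClasses"],  # reuse what the host already has
    to_host=["ingresses"],  # push pipeline-created ingresses back to the host
)
# JSON is valid YAML, so this output can be saved and used as a config file.
print(json.dumps(config, indent=2))
```

Generating the file per pipeline keeps the list of synced kinds explicit and lets each pipeline sync only what it needs.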

Lastly, vCluster enforces isolation at the CRD level, ensuring that any pipeline you’re running won’t interfere with others, despite being on the same host cluster.

Improved DevEx

Virtual clusters let developers spin up environments quickly, so they can focus on building rather than waiting for resources. Direct cluster access enables efficient debugging without special admin permissions on the host, making teams more self-sufficient.

Happy Security Teams

Security teams are especially pleased with virtual clusters because they allow organizations to integrate with their own single sign-on (SSO) systems, ensuring robust authentication and streamlined user management. This integration means teams can leverage their existing identity providers to control access, mapping users and groups directly to cluster-level privileges using Kubernetes role-based access control (RBAC), without exposing or risking the underlying host cluster.

Within vCluster, users have admin-level access to manage resources, debug and configure policies independently, but their permissions on the host cluster remain strictly minimized. This strong isolation ensures that even if a user has full control over their virtual cluster, they cannot compromise or alter the security or configuration of the host infrastructure. Security teams value this architecture because it enforces the principle of least privilege, reduces the risk of privileged access misuse and maintains a clear separation between tenant and host environments, significantly lowering the potential attack surface and safeguarding core infrastructure assets.
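
As an illustration of mapping an identity-provider group to admin rights inside a virtual cluster, the sketch below generates a standard Kubernetes ClusterRoleBinding manifest. The `group_admin_binding` helper and the group name `team-payments` are hypothetical. The manifest is meant to be applied inside the virtual cluster only, so it grants nothing on the host.

```python
import json


def group_admin_binding(group, binding_name):
    """Return a ClusterRoleBinding manifest granting cluster-admin to a group."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": binding_name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        "subjects": [
            {"apiGroup": "rbac.authorization.k8s.io", "kind": "Group", "name": group}
        ],
    }


# Hypothetical group name; in practice it comes from your SSO provider.
manifest = group_admin_binding("team-payments", "team-payments-admin")
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON manifests, the printed output can be piped to `kubectl apply -f -` while connected to the virtual cluster.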

Conclusion

Adopting ephemeral environments is a proven strategy for accelerating development, improving security and reducing operational overhead. Teams can spin up isolated Kubernetes environments on demand, making development and testing smoother and more efficient. Developers no longer have to worry about resource conflicts or wait for shared environments, and everything from CI integration to cleanup becomes much simpler.