StormForge Makes ML for Kubernetes Clusters Accessible

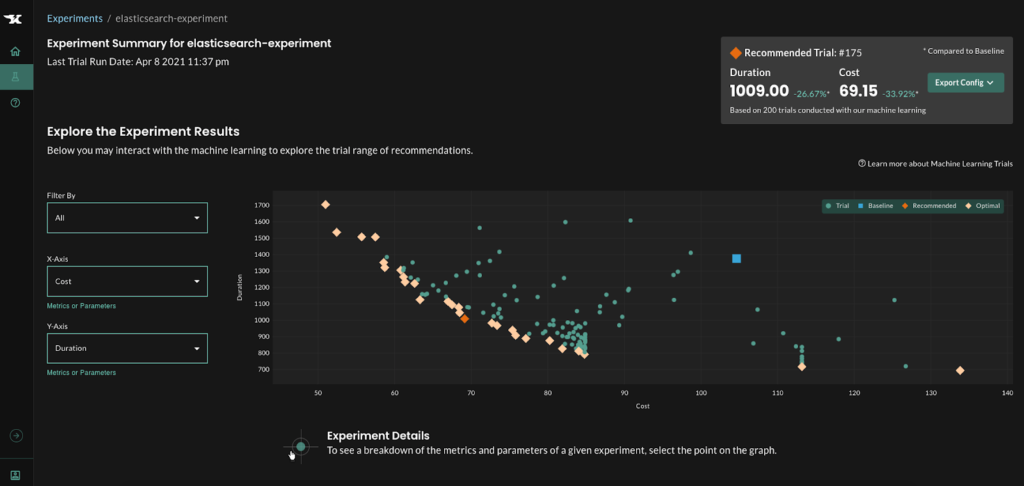

At the online KubeCon + CloudNativeCon Europe 2021 conference, StormForge today added the ability to automatically generate experiments to its platform, which employs agent software and machine learning algorithms to remove the guesswork from deploying applications on Kubernetes clusters.

In addition, the company has revamped its namesake platform to make it more accessible to Kubernetes novices; it now includes guided walkthroughs for setting up and running the experiments.

John Platt, vice president of machine learning for StormForge, says those capabilities reduce the need for IT teams to guess how many resources to make available to any given application. Most IT environments today incur unnecessary expense because IT teams routinely overprovision IT infrastructure to make sure no application is starved for resources, notes Platt.

Over time, however, the cost of all that additional provisioning of IT infrastructure in the cloud and in on-premises IT environments adds up, notes Platt. A recent StormForge survey of 105 IT professionals in North America finds that nearly half of cloud resource spending (48%) is wasted. Reducing that waste is, to varying degrees, a priority for three-quarters of organizations (76%), with a third (33%) citing it as a high priority.

The survey also finds roughly three-quarters of respondents are already running Kubernetes clusters, with just under half running 11 or more. A third of those respondents (33%) said they rely on the default settings from cloud service providers to size their clusters, while just under a third (31%) rely on a trial-and-error approach. Surprisingly, just over a quarter (27%) said they are already relying on machine learning algorithms to properly size Kubernetes clusters.

In general, StormForge is making a concerted effort to reduce the level of expertise required to successfully deploy a Kubernetes application. Kubernetes is simultaneously one of the most powerful and complex platforms any IT organization can deploy. In many cases, all the settings that need to be mastered are simply too intimidating for the average IT administrator, which Platt notes results in fewer Kubernetes clusters being employed in production environments than there otherwise might be.

As IT environments become more complex, the number of IT organizations relying on machine learning algorithms to optimize those environments is only going to increase. Given their inherent complexity, Kubernetes clusters will be among the first platforms to which machine learning algorithms will be applied to compensate for a general shortage of IT expertise. Those machine learning algorithms won’t replace the need for IT professionals, but it will become increasingly difficult for IT teams to manage complex IT environments without them. Most of the tasks being automated by machine learning algorithms are functions that human beings are not able to do anyway, notes Platt.

It’s unclear how quickly IT teams will embrace machine learning and other forms of AI operations (AIOps), but there is a strong correlation between usage of Kubernetes and reliance on DevOps best practices for managing IT that, at their core, seek to ruthlessly automate every IT process possible. As such, DevOps practitioners will undoubtedly be in the vanguard of the AIOps revolution.