MetalBear Simplifies Testing of CI Pipelines on Kubernetes Staging Servers

MetalBear this week added to its mirrord platform the ability to mirror a continuous integration (CI) pipeline, giving application developers access to a staging server in a cloud computing environment that more closely resembles the actual production environment.

Company CTO Eyal Bukchin said mirrord for CI will enable developers to run concurrent CI tests in a shared Kubernetes environment rather than requiring them to deploy and maintain a local instance of Kubernetes that doesn’t accurately reflect the production environment.

Alternatively, application developers can set up a Kubernetes cluster in a cloud service, but it can take 20 to 30 minutes for the environment to spin up and for container images to be built and deployed. Even then, those environments are unlikely to accurately reflect a production environment because they typically have limited access to data, third-party application programming interfaces (APIs) and microservices, noted Bukchin.

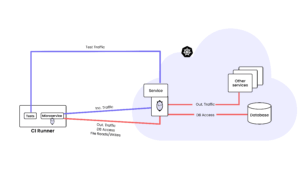

In contrast, mirrord was created to provide process-level access to a staging cluster so that software engineers can more easily review changes and updates in an environment that more closely resembles the clusters running in production, said Bukchin. That capability is now being extended to pipelines using a CI runner that proxies incoming and outgoing traffic, environment variables, and files moving between it and the cluster.

Capabilities such as HTTP traffic filtering, database branching, and queue splitting ensure that CI runners’ traffic and data are isolated, without affecting other runners or developers using the shared environment. Developers can also invoke a mirrord Policies tool to apply guardrails that prevent unsafe operations on the shared cluster.
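To make the idea of HTTP traffic filtering concrete, the following is a minimal conceptual sketch in Python of header-based routing: a request is handed to a CI runner only when it carries a header value that runner has claimed, and everything else continues to the service already deployed in the shared cluster. This is not mirrord's implementation or API. The names `RunnerFilter`, `route_request`, the `X-CI-Run` header, and the runner identifiers are all hypothetical and exist only for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RunnerFilter:
    """Hypothetical record: one CI runner and the header pattern it claims."""
    runner_id: str
    header_name: str
    value_pattern: str  # regular expression matched against the header value


def route_request(headers, filters, default_target="deployed-service"):
    """Return the runner that claims this request, else the default target."""
    # HTTP header names are case-insensitive, so normalize before comparing.
    normalized = {name.lower(): value for name, value in headers.items()}
    for f in filters:
        value = normalized.get(f.header_name.lower())
        if value is not None and re.fullmatch(f.value_pattern, value):
            return f.runner_id
    # No runner claimed the request, so it stays with the shared deployment.
    return default_target


# Two concurrent CI runs, each claiming only its own tagged traffic
# (assumed header name and values, chosen for this example).
filters = [
    RunnerFilter("ci-runner-a", "X-CI-Run", r"run-101"),
    RunnerFilter("ci-runner-b", "X-CI-Run", r"run-102"),
]
```

Under these assumptions, a request tagged `X-CI-Run: run-101` reaches only `ci-runner-a`, while untagged traffic from other developers is left untouched, which is the kind of isolation the article describes for runners sharing one environment.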

Collectively, those capabilities enable a developer to test a CI pipeline against real services, real data, and real traffic, saving 20 to 25 minutes per CI run, noted Bukchin. Developers no longer have to spin up a cloud service or deploy a local instance of a Kubernetes cluster, where code is created only to fail later in the production environment.

That issue will become even more problematic as organizations start to rely more on AI coding tools to build and test cloud-native applications running on Kubernetes clusters, he added.

While many developers still find Kubernetes environments challenging, a survey of 628 IT professionals conducted by the Cloud Native Computing Foundation (CNCF) finds 59% of respondents reporting that “much” or “nearly all” of their development and deployment is now cloud native, with 82% running Kubernetes clusters in production environments.

Similarly, a global survey of 828 enterprise IT leaders conducted by the Futurum Group finds 66% of respondents reporting their organization is using Kubernetes for internal business software, compared to 48% using it to deploy customer-facing services.

No matter how many applications are deployed on Kubernetes clusters, however, the one certainty is that in many instances testing them before deployment can be made a lot simpler. In fact, as it becomes easier to test code in those environments, one of the primary objections many developers have to building cloud-native applications can finally be addressed.